SMHS LinearModeling


Scientific Methods for Health Sciences - Linear Modeling

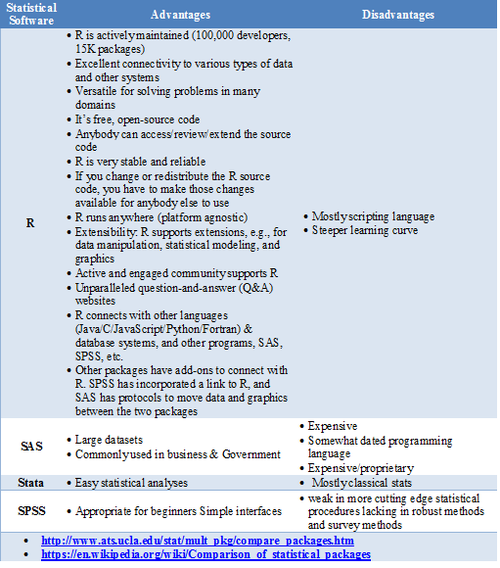

Statistical Software: Pros/Cons Comparison

Google Scholar research article publications, by year

| Year | R | SAS | SPSS |
|---|---|---|---|
| 1995 | 8 | 8620 | 6450 |
| 1996 | 2 | 8670 | 7600 |
| 1997 | 6 | 10100 | 9930 |
| 1998 | 13 | 10900 | 14300 |
| 1999 | 26 | 12500 | 24300 |
| 2000 | 51 | 16800 | 42300 |
| 2001 | 133 | 22700 | 68400 |
| 2002 | 286 | 28100 | 88400 |
| 2003 | 627 | 40300 | 78600 |
| 2004 | 1180 | 51400 | 137000 |
| 2005 | 2180 | 58500 | 147000 |
| 2006 | 3430 | 64400 | 142000 |
| 2007 | 5060 | 62700 | 131000 |
| 2008 | 6960 | 59800 | 116000 |
| 2009 | 9220 | 52800 | 61400 |
| 2010 | 11300 | 43000 | 44500 |
| 2011 | 14600 | 32100 | 32000 |

library(ggplot2)
library(reshape)
Data_R_SAS_SPSS_Pubs <- read.csv('https://umich.instructure.com/files/522067/download?download_frd=1', header=TRUE)
df <- data.frame(Data_R_SAS_SPSS_Pubs)
# convert to long format: one row per (Year, software) pair, stored in columns "variable" and "value"
df <- melt(df, id.vars = 'Year')
# 'linewidth' requires ggplot2 >= 3.4.0 (older versions use 'size')
ggplot(data=df, aes(x=Year, y=value, colour=variable, group=variable)) + geom_line(linewidth=1.5) + labs(x='Year', y='Publications', colour='Software')

Quality Control

Questions:

- Is the data what it’s supposed to be (does it represent the study cohort/population)?

- How can we inspect the quality of the data?

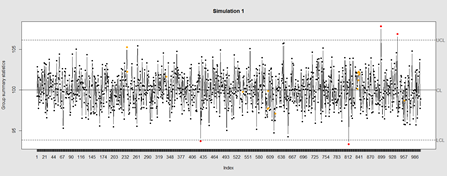

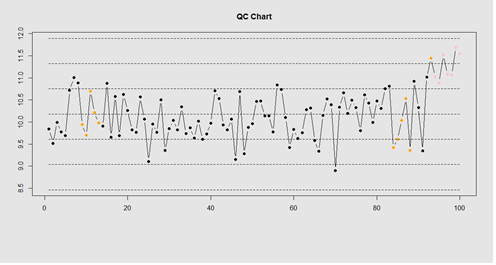

Data Quality Control (QC) and Quality Assurance (QA) are important components of all modeling, analytics and visualization workflows, and they precede all subsequent data processing steps. QC and QA may be performed manually or automatically. Statistical quality control involves quantitative methods for monitoring and controlling a process, or data derived from observing a natural phenomenon. For example, is there evidence in the plots below of a change in the mean of these processes?

# install.packages("qcc")
library(qcc)
set.seed(1234)   # for reproducibility
# simulate data with base value of 100 w/ normally distributed error
demo.data.1 <- rep(100, 1000) + rnorm(1000, mean=0, sd=2)
qcc(demo.data.1, type="xbar.one", center=100, add.stats=FALSE,
    title="Simulation 1", xlab="Index")

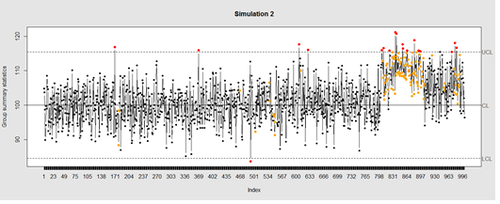

Now let’s introduce shifts in the process mean:

# first 800 points have base value of 100 w/ normally distributed error,
# next 100 points have base value of M = 110 w/ normally distributed error,
# last 100 points have base value of 105 (halfway between 100 and M) w/ normally distributed error
M <- 110
SD <- 5
demo.data.2 <- c(rep(100, 800), rep(M, 100), rep(100+(M-100)/2, 100)) + rnorm(1000, mean=0, sd=SD)
qcc(demo.data.2, type="xbar.one", center=100, add.stats=FALSE, title="Simulation 2", xlab="Index")

Our goal is to use statistical quality control to automatically identify issues with the data. The qcc package in R provides methods for statistical quality control – given the data, it identifies candidate points as outliers based on the Shewhart Rules. Color-coding the data also helps point out irregular points.

The Shewhart control chart rules (dating back to the 1930s) are based on monitoring events that are unlikely when the controlled process is stable. Occurrences of such atypical events are alarm signals suggesting that the stability of the process may be compromised and that the process may have changed.

An instance of such an unlikely event is the situation when the upper/lower control limits (UCL or LCL) are exceeded. UCL and LCL are constructed as ±3σ limits around the central line, and the process is considered under control within them. Additional warning limits (LWL and UWL) are constructed at ±2σ or ±σ. Other rules specifying events that have low probability when the process is under control can be constructed:

1. One point exceeds LCL/UCL.

2. Nine consecutive points lie on the same side of (above or below) the central line.

3. Six consecutive points show a steadily increasing or decreasing trend.

4. The difference of consecutive values alternates in sign for fourteen points.

5. Two out of three points exceed the LWL or UWL limits.

6. Four out of five points lie on the same side of the central line and exceed the ±σ limit.

7. Fifteen consecutive points are within the ±σ limits.

8. Eight consecutive values are beyond the ±σ limits (on either side).
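As a rough illustration of how the first three rules above translate into code, here is a minimal base-R sketch. The function name check_rules and the example values are hypothetical, and it assumes the center and σ are known (e.g., estimated from in-control calibration data); qcc's own rule implementation may differ in details.

```r
# Hypothetical helper (not part of qcc): flags points that violate
# rules 1-3 from the list above, assuming a known center and sigma.
check_rules <- function(x, center, sigma) {
  n <- length(x)
  z <- (x - center) / sigma        # standardized distance from the central line
  s <- sign(z)                     # +1 above, -1 below, 0 exactly on the line
  rule1 <- abs(z) > 3              # rule 1: point beyond LCL/UCL (±3σ)
  rule2 <- rep(FALSE, n)           # rule 2: 9 consecutive points on one side
  rule3 <- rep(FALSE, n)           # rule 3: 6 consecutive points steadily rising/falling
  for (i in seq_len(n)) {
    if (i >= 9) {
      side <- s[(i - 8):i]
      rule2[i] <- all(side > 0) || all(side < 0)
    }
    if (i >= 6) {
      steps <- diff(x[(i - 5):i])  # 5 successive differences among 6 points
      rule3[i] <- all(steps > 0) || all(steps < 0)
    }
  }
  data.frame(x = x, rule1 = rule1, rule2 = rule2, rule3 = rule3)
}

# Usage with made-up values, center = 100 and sigma = 2:
x <- c(99, 101, 99, 100, 107, 101, 102, 103, 104, 105, 105.5, 101, 101, 101)
res <- check_rules(x, center = 100, sigma = 2)
which(res$rule1)   # 5      (107 is 3.5 sigma above the center)
which(res$rule2)   # 13 14  (points 5-13 and 6-14 all lie above the center)
which(res$rule3)   # 11     (points 6-11 increase steadily)
```

The loop checks each point against the window that ends at it, so a flag marks the point at which the pattern is first completed.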

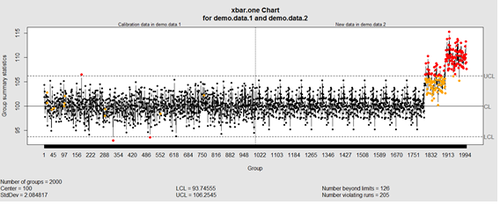

We can define training/testing datasets within qcc: the data used to calibrate the chart (demo.data.1) are passed as the first argument, and the new data representing the test set (demo.data.2) are passed via the newdata argument.

# example using holdout/test sets
demo.data.1 <- rep(100, 1000) + rnorm(1000, mean=0, sd=2)
demo.data.2 <- c(rep(100, 800), rep(105, 100), rep(110, 100)) + rnorm(1000, mean=0, sd=2)
MyQC <- qcc(demo.data.1, newdata=demo.data.2, type="xbar.one", center=100, add.stats=FALSE,
            title="Simulation 1 vs. 2", xlab="Index")
plot(MyQC)   # or plot(MyQC, chart.all=FALSE) to show only the new data

# add warning limits at the 95% level (about ±1.96 std. deviations)
MyQC2 <- qcc(demo.data.1, newdata=demo.data.2, type="xbar.one", center=100, add.stats=FALSE,
             title="Second Simulation 1 vs. 2", xlab="Index")
warn.limits <- limits.xbar(MyQC2$center, MyQC2$std.dev, MyQC2$sizes, 0.95)
plot(MyQC2, restore.par = FALSE)
abline(h = warn.limits, lty = 2, lwd=2, col = "blue")

The arguments of limits.xbar(center, std.dev, sizes, conf) are:

- center: the sample/group center statistic.

- std.dev: the within-group standard deviation.

- sizes: the sample sizes.

- conf: a numeric value used to compute the control limits, specifying the number of standard deviations (if conf > 1) or the confidence level (if 0 < conf < 1).
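To make the conf convention concrete, the following base-R sketch computes X-bar limits directly. It is a hypothetical helper (xbar_limits), written for illustration under the usual assumption that the limits have the form center ± z·std.dev/√n; for actual charts, use qcc's own limits.xbar().

```r
# Hypothetical helper (not qcc's code): X-bar control limits using the 'conf'
# convention above: conf > 1 = number of std. deviations,
# 0 < conf < 1 = two-sided confidence level.
xbar_limits <- function(center, std.dev, size, conf) {
  if (conf > 1) {
    nsigmas <- conf
  } else if (conf > 0 && conf < 1) {
    nsigmas <- qnorm(1 - (1 - conf) / 2)   # e.g., conf = 0.95 -> about 1.96
  } else {
    stop("conf must be > 1 (sigmas) or in (0, 1) (confidence level)")
  }
  half.width <- nsigmas * std.dev / sqrt(size)   # std. error of a mean of 'size' values
  c(LCL = center - half.width, UCL = center + half.width)
}

xbar_limits(center = 100, std.dev = 2, size = 1, conf = 3)     # LCL = 94, UCL = 106
xbar_limits(center = 100, std.dev = 2, size = 1, conf = 0.95)  # about 96.08 and 103.92
xbar_limits(center = 100, std.dev = 2, size = 4, conf = 3)     # LCL = 97, UCL = 103
```

Larger samples shrink the limits by √n, which is why X-bar charts on grouped data react faster to small mean shifts than individual-value charts.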

![SMHS_LinearModeling_Fig006](SMHS_LinearModeling_Fig006.png)

Natural processes may have errors that are non-normally distributed. However, using (appropriate) transformations we can often normalize the errors.
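For example, right-skewed positive measurements often become approximately symmetric after a log transform. The sketch below uses simulated log-normal data (an assumption chosen so that the log transform is exactly the right one); in practice, a Box-Cox transformation, e.g., via MASS::boxcox(), can help choose a suitable power transform.

```r
set.seed(2024)
raw <- rlnorm(500, meanlog = 4, sdlog = 0.5)   # simulated right-skewed, positive data
logged <- log(raw)                              # log transform: normal by construction here

# simple moment-based sample skewness (about 0 for a symmetric distribution)
skewness <- function(v) mean((v - mean(v))^3) / sd(v)^3

round(c(raw = skewness(raw), log = skewness(logged)), 2)
# the log-transformed values could then be charted, e.g., with qcc(logged, type = "xbar.one")
```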

We can use thresholds to define zones in the data, where each zone spans one standard deviation, from three standard deviations below the mean to three standard deviations above it.

# returns a length(x) x 7 matrix whose columns hold the boundaries mean + k*sd, k = -3, ..., 3
find_zones <- function(x) {
  x.mean <- mean(x)
  x.sd <- sd(x)
  boundaries <- seq(-3, 3)
  # creates a set of zone boundaries for each point in x
  zones <- sapply(boundaries, function(i) {
    i * rep(x.sd, length(x))
  })
  zones + x.mean
}
head(find_zones(demo.data.2))

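evaluate_zones <- function(x) {
  zones <- find_zones(x)
  colnames(zones) <- paste("zone", -3:3, sep="_")
  # count the boundaries lying below each point (0..7), shifted to -3..4
  x.zones <- rowSums(x > zones) - 3
  # make the coding symmetric about the mean: 1..4 above the mean, -1..-4 at/below it,
  # so |zone| = k means the point lies between k-1 and k std. deviations from the mean
  # (|zone| = 4 means beyond 3 std. deviations)
  x.zones <- ifelse(x.zones > 0, x.zones, x.zones - 1)
  x.zones
}
evaluate_zones(demo.data.2)

# rule numbers below are local to this function, not the numbered Shewhart list above
find_violations <- function(x.zones, i) {
  # rule4: the last 9 points all lie on the same side of the mean
  values <- x.zones[max(i-8, 1):i]
  rule4 <- ifelse(all(values > 0), 1, ifelse(all(values < 0), -1, 0))
  # rule3: at least 2 of the last 6 points lie beyond 1 std. deviation on the same side
  values <- x.zones[max(i-5, 1):i]
  rule3 <- ifelse(sum(values >= 2) >= 2, 1, ifelse(sum(values <= -2) >= 2, -1, 0))
  # rule2: all of the last 4 points lie beyond 2 std. deviations on the same side
  values <- x.zones[max(i-3, 1):i]
  rule2 <- ifelse(all(values >= 3), 1, ifelse(all(values <= -3), -1, 0))
  # rule1: at least one of the last 4 points lies beyond 2 std. deviations
  values <- x.zones[max(i-3, 1):i]
  rule1 <- ifelse(any(values > 2), 1, ifelse(any(values < -2), -1, 0))
  c("rule1"=rule1, "rule2"=rule2, "rule3"=rule3, "rule4"=rule4)
}
find_violations(evaluate_zones(demo.data.2), 20)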

Now we can compute the rules for each point and assign a color to any violations.

library("plyr")
compute_violations <- function(x, start=1) {
  x.zones <- evaluate_zones(x)
  # one row of rule flags per point, from 'start' to the end of x
  results <- ldply(start:length(x), function(i) {
    find_violations(x.zones, i)
  })
  # color each point by the first rule it violates (black = no violation)
  results$color <- ifelse(results$rule1!=0, "pink",
                   ifelse(results$rule2!=0, "red",
                   ifelse(results$rule3!=0, "orange",
                   ifelse(results$rule4!=0, "yellow",
                   "black"))))
  results
}
tail(compute_violations(demo.data.2))

Now let’s make a quality control chart.

plot_qc_chart <- function(x, holdout) {
  start <- length(x) - holdout + 1                         # first point of the plotted window
  my.qcc <- compute_violations(x, start)                   # rule flags and colors for points start..n
  bands <- find_zones(x)[start:length(x), , drop=FALSE]    # zone boundaries over the same window
  plot.data <- x[start:length(x)]
  plot(plot.data, col=my.qcc$color, type='b', pch=19,
       ylim=range(bands, plot.data),
       main="QC Chart", xlab="", ylab="")
  for (i in 1:7) {
    lines(bands[, i], col="gray50", lwd=0.75, lty=2)      # dashed mean + k*sd lines, k = -3..3
  }
}
demo.data.4 <- c(rep(10, 90), rep(11, 10)) + rnorm(100, mean=0, sd=0.5)
plot_qc_chart(demo.data.4, 100)

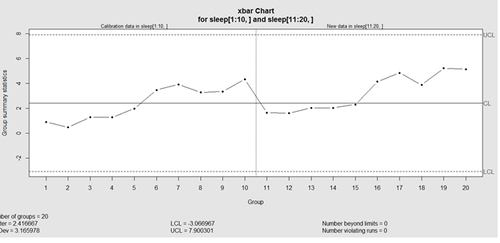

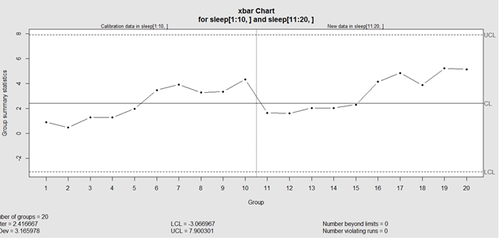

Let’s use the built-in “Student's Sleep Data” (sleep) dataset.

library("qcc")
# group the 'extra' sleep hours by drug 'group': a 2 x 10 matrix (2 samples of size 10)
q <- qcc.groups(sleep$extra, sleep$group)
dim(q)
obj_avg_test_1_2 <- qcc(q[1:2,], type="xbar")
obj_avg_test_1_5 <- qcc(q[,1:5], type="xbar")
summary(obj_avg_test_1_5)
obj_avg_test_train <- qcc(q[,1:5], type="xbar", newdata=q[,6:10])
# How is this different from the following chart, which uses all 10 columns for calibration?
obj_avg_new <- qcc(q[,1:10], type="xbar")

This control chart shows the center as a solid horizontal line, the upper and lower control limits as dashed lines, and the sample group statistics (e.g., means) as a piecewise line connecting the points. The bottom of the plot includes summary statistics, the number of points beyond the control limits, and the number of violating runs. If the process is “in control”, we can use the estimated limits to monitor prospective (new) data sampled from the same process/protocol. For instance,

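obj_test_1_10_train_11_20 <- qcc(matrix(sleep$extra[1:10], ncol=2, byrow=TRUE), type="xbar", newdata=matrix(sleep$extra[11:20], ncol=2, byrow=TRUE))

plots the X-bar chart for the training (observations 1-10) and testing (observations 11-20) sleep data, where the center and control limits are estimated only from the first 10 (training) observations. Here, as an illustrative choice, each half of the data is arranged into 5 samples of size 2.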

Now try a range (R) chart:

obj_R <- qcc(q[,1:5], type="R", newdata=q[,6:10])

A control chart aims to enhance our ability to monitor and track a process proxied by the data. When special causes of variation (random or not) are present, the data may be considered “out of control”, and corresponding action may need to be taken to identify, control for, or eliminate such causes. A process is declared to be “controlled” if all data points are randomly spread out within the control limits. These Lower and Upper Control Limits (LCL, UCL) are usually computed as ±3σ from the center (e.g., mean). The qcc default limits can be changed using the nsigmas argument, or by specifying a confidence level via the confidence.level argument.

When the process is governed by a Gaussian distribution, the ±3σ limits correspond to a two-tailed probability of p=0.0027.
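The 0.0027 figure is easy to reproduce in base R with pnorm(); the same calculation run in reverse with qnorm() links a confidence level to a number of sigmas (the idea behind choosing between nsigmas and confidence.level). This small sketch assumes Gaussian, independent observations, and the helper names are hypothetical.

```r
# Two-tailed probability that a Gaussian value falls outside mu ± k*sigma
tail_prob <- function(k) 2 * pnorm(-k)
tail_prob(3)        # about 0.0027: false-alarm rate of 3-sigma limits
tail_prob(2)        # about 0.0455: rate for 2-sigma warning limits
1 / tail_prob(3)    # about 370: average number of in-control points per false alarm

# Reverse direction: number of sigmas matching a two-sided confidence level
conf_to_nsigmas <- function(conf) qnorm(1 - (1 - conf) / 2)
conf_to_nsigmas(0.9973)   # about 3
conf_to_nsigmas(0.95)     # about 1.96
```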

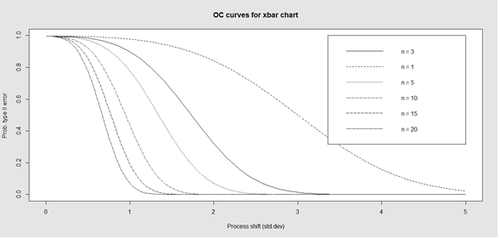

Finally, an operating characteristic (OC) curve shows the probability of not detecting a shift in the process (false negative, type II error), i.e., the probability of erroneously accepting a process as being “in control” when, in fact, it is out of control.

# par(mfrow=c(1,1))
oc.curves(obj_test_1_10_train_11_20)
# oc.curves(obj_test_1_10_train_11_20, identify=TRUE)  # to manually identify specific OC points

The function oc.curves returns a matrix or a vector of probability values representing the type II errors for different sample sizes. See help(oc.curves) for all options; e.g., identify=TRUE allows interactive identification of values on the plot.

Notice that the OC curve is “S”-shaped: as expected, as the size of the process shift (or, for attribute charts, the percent of nonconforming values) increases, the probability of acceptance decreases. A small sub-sample, instead of the entire data, may be inspected to determine the quality of a process. We can accept the data as in-control as long as the process percent nonconforming is below a predefined level.
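For an X-bar chart with ±kσ limits and samples of size n, the standard textbook expression for the type II error after the process mean shifts by δ standard deviations is β(δ) = Φ(k − δ√n) − Φ(−k − δ√n), where Φ is the standard normal CDF. The base-R sketch below tabulates it; oc_xbar is a hypothetical helper that assumes normal data and a known σ, and it is meant only to show where the S-shape comes from, not to replicate oc.curves() exactly.

```r
# Hypothetical helper: probability that an X-bar chart with k-sigma limits does NOT
# signal on a sample of size n after the mean shifts by 'delta' std. deviations
oc_xbar <- function(delta, n, k = 3) {
  pnorm(k - delta * sqrt(n)) - pnorm(-k - delta * sqrt(n))
}

shifts <- seq(0, 3, by = 0.5)
sizes <- c(1, 2, 5)
type2 <- sapply(sizes, function(n) oc_xbar(shifts, n))   # rows = shifts, columns = sample sizes
dimnames(type2) <- list(paste0("shift=", shifts), paste0("n=", sizes))
round(type2, 3)   # starts near 0.997 at no shift and falls toward 0 as the shift grows
```

Larger samples push the curve down faster, i.e., the chart detects a given shift with higher probability.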

Linear Modeling

Questions:

- Are there (linear) associations between predictors and one or more response variables?

- When should we look for linear relations, and what assumptions play a role?

Let’s use some of the data included in the Appendix. Snapshots of the first few rows in the data are shown below:

Eyeballing the data may suggest that Race “2” subjects may have higher “Weights” (first dataset), or that “Treatment A” yields higher “Observations” (second dataset). However, this cursory observation may be incorrect, as we may be looking at a small/exceptional sub-sample of the entire population. Data-driven linear modeling allows us to quantify such patterns and compute probability values expressing the strength of the evidence in the data supporting such conclusions. In a nutshell, we express relationships of interest (e.g., weight as a function of race, or Observation as a function of treatment) using a linear function as an analytical representation of these inter-variable relations:

Weight ~ Race, Weight ~ Genotype, Obs ~ Treatment, Obs ~ Day, etc.

For example, W = a + b*R, where W is Weight, R is Race, a is the intercept and b is the effect of Race.

This “~” (tilde) notation reads as “Weight predicted by Race” or “Observation as a linear function of Treatment”. The “dependent variable” (a measurable response) on the left is predicted by the factor on the right acting as an “independent variable”, co-variate, predictor, “explanatory variable”, or “fixed effect”.

Oftentimes, inter-dependencies are not perfect, deterministic, or rigid (as they are when predicting the area of a disk from its radius, or the 3D spatial location of a planet at a precise date and time). Weight may not be completely and uniquely determined by Race alone, as many different factors play a role (genetics, environment, aging, etc.). Even if we could measure all factors that we can identify as potentially influencing Weight, there would still be intrinsic random variability in the observed Weight measures, which cannot be controlled for. This intrinsic random variation may be captured and accounted for using a “random” term at the end:

Weight ~ Race + ε, where ε ~ D(m=0, s), i.e., ε follows some distribution D with mean 0 and standard deviation s.

Epsilon (“ε”) represents the error term of predicting Weight by Race alone, and it summarizes the aggregate impact of all factors aside from Race that affect an individual’s Weight and are experimentally uncontrollable or random. This formula is a schematic analytic representation of a linear model that we’re going to estimate, quantify and use for prediction and inference. The right-hand side splits the knowledge representation of Weight into 2 complementary components: a “fixed effect” for Race, which we understand and expect, and a “random effect” (“ε”) capturing what we don’t know well. (W ~ R represents the “structural” or “systematic” part of the linear model, and “ε” stands for the “random” or “probabilistic” part of the model.)
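To see how the systematic and random parts combine, here is a small simulation of Weight ~ Race + ε. All values (a = 70, b = 5, s = 8, n = 200) are made up for illustration, and ε is assumed to be Gaussian; lm() should recover estimates close to the chosen a and b.

```r
set.seed(42)
n <- 200
race <- factor(sample(c("1", "2"), n, replace = TRUE))   # two-level Race factor
a <- 70          # hypothetical mean Weight for Race "1" (intercept)
b <- 5           # hypothetical fixed effect of Race "2" relative to Race "1"
s <- 8           # hypothetical standard deviation of the random term

epsilon <- rnorm(n, mean = 0, sd = s)                     # random part: epsilon ~ N(0, s)
weight <- a + b * (race == "2") + epsilon                 # systematic part + random part

fit <- lm(weight ~ race)
coef(fit)        # "(Intercept)" near a, "race2" near b
sigma(fit)       # residual standard error, near s
```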

R Experiment (See Appendix)

mydata1 <- data.frame(
  Subject = c(13, 14, 15, 16, 17, 18),
  Day = c("Day1", "Day1", "Day1", "Day2", "Day2", "Day2"),
  Treatment = c("B", "B", "B", "B", "A", "A"),
  Obs = c(6.472687, 7.017110, 6.200715, 6.613928, 6.829968, 7.387583)
)

We construct an R data frame with four columns (Subject, Day, Treatment, Obs) for 6 subjects and save it as “mydata1”:

| Subject | Day | Treatment | Obs |
|---|---|---|---|
| 13 | Day1 | B | 6.472687 |
| 14 | Day1 | B | 7.017110 |
| 15 | Day1 | B | 6.200715 |
| 16 | Day2 | B | 6.613928 |
| 17 | Day2 | A | 6.829968 |
| 18 | Day2 | A | 7.387583 |

Using the linear model Obs ~ Treatment + ε, we can invoke the linear modeling function lm(). The “ε” term is implicit in all models, so it need not be specified.

lm.1 <- lm(Obs ~ Treatment, mydata1)

The assignment operator “<-” stores the linear model result in object lm.1. For these data (the data object is “mydata1”), this model expresses Obs as a function of Treatment. To inspect the result of the linear model, use the summary() function; AIC() and BIC() report the Akaike and Bayesian information criteria of the fitted model:

AIC(lm.1); BIC(lm.1)

summary(lm.1)

The result is:

Call:

lm(formula = Obs ~ Treatment, data = mydata1)

Residuals:

1 2 3 4 5 6

-0.10342 0.44100 -0.37540 0.03782 -0.27881 0.27881

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 7.1088 0.2507 28.350 9.21e-06 ***

TreatmentB -0.5327 0.3071 -1.734 0.158

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 0.3546 on 4 degrees of freedom

Multiple R-squared: 0.4293, Adjusted R-squared: 0.2866

F-statistic: 3.008 on 1 and 4 DF, p-value: 0.1579

The report shows:

- The model analytical formula specified by the lm() call.

- The residuals (errors, discrepancies between observed and model-predicted outcomes).

- The coefficients of the fixed effects (predictors, explanatory variables).

- And finally, the overall model quality (the ability of the model to describe the linear relations in these data). “Multiple R-squared” refers to the R² statistic, which measures the “variance explained by the model” or “variance accounted for by the model”. In general, 0 ≤ R² ≤ 1; in our result, R² = 0.4293, which is good, but not great. In essence, 42.93% of the data variability may be explained by this specific (best linear fit) model. In this case, the model relies solely on “Treatment” (fixed effect) to explain Obs (outcome), so R² reflects how much of the Obs variance is accounted for by the different Treatments (A or B).

Models with high R² values are preferred, subject to 2 caveats:

- What is considered a high R² value is relative and depends on the study/application.

- When the study phenomenon is highly deterministic, R² values can approach 1.

A higher number of explanatory variables and greater model complexity tend to yield higher R² values, but such models are more difficult to interpret.

The “Adjusted R-squared” is a modified R² that penalizes the proportion of variance “explained” by the model for the number of fixed effects included in the model; for a given R², adding predictors lowers the adjusted value. Here, R²adj = 0.2866, and in general R²adj can be much lower than R² when the model includes many fixed effects relative to the sample size.
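Both statistics can be recomputed by hand, with R² = 1 − SS_res/SS_tot and R²adj = 1 − (1 − R²)(n − 1)/(n − p − 1), where n is the number of observations and p the number of predictors. The sketch below uses the Obs values from mydata1 with Treatment labels B, B, B, B, A, A, which are the labels that reproduce the summary(lm.1) output shown above (e.g., an intercept of 7.1088, the mean of the two A values).

```r
# Obs values as in mydata1; Treatment labels B, B, B, B, A, A reproduce the lm.1 output
obs <- c(6.472687, 7.017110, 6.200715, 6.613928, 6.829968, 7.387583)
treatment <- factor(c("B", "B", "B", "B", "A", "A"))
fit <- lm(obs ~ treatment)

n <- length(obs)                      # 6 observations
p <- 1                                # 1 predictor (Treatment)
ss.res <- sum(residuals(fit)^2)       # residual sum of squares
ss.tot <- sum((obs - mean(obs))^2)    # total sum of squares around the grand mean
r2 <- 1 - ss.res / ss.tot
r2.adj <- 1 - (1 - r2) * (n - 1) / (n - p - 1)
round(c(R2 = r2, R2.adj = r2.adj), 4)   # 0.4293 and 0.2866, as in summary(lm.1)
```

With only 6 observations, the (n − 1)/(n − p − 1) = 5/4 penalty is large, which is why R²adj drops so far below R² here.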

The statistical test of “model significance” is quantified by the output “F-statistic: 3.008 on 1 and 4 DF, p-value: 0.1579”. The null hypothesis states that the model captures no useful information about the process (i.e., all effect coefficients are zero); the p-value quantifies the data-driven evidence for rejecting this null in favor of an alternative stating that the model does capture useful patterns in the data. Specifically, the p-value is the probability, computed under the assumption that the null hypothesis is true, of obtaining an F-statistic at least as large as the observed one. In this case,

- The null hypothesis is Ho: “Treatment has no effect on Obs”.

- The alternative research hypothesis is H1: “Treatment has an effect on Obs”.

This p-value is 0.1579, so the linear model fails to reject the null hypothesis. This leaves open the possibility that Treatment may have no effect on Obs (based on these 6 data points alone).

Conversely, if the p-value were much smaller, data like these would be unlikely to be observed if Ho were true. We would then interpret the small p-value as evidence for the alternative hypothesis “Treatment affects Obs” and call the model result “statistically significant”.

There is a distinction between the overall “model significance” (quantified by the p-value at the very bottom of the output, which considers all effects together) and the p-values of individual effects (the coefficients table, which includes the significance of each predictor). Given the degrees of freedom, the model F-statistic determines the p-value one-to-one: the p-value is the right-tail probability of the F(df1, df2) distribution beyond the observed F-statistic. To report the overall model inference in this case, we can state:

“Using a simple linear model of Obs as a function of Treatment, the model was not significant (F(1,4)=3.008, p>0.15), which indicates that “Treatment” may not be a critical explanatory factor describing the “Obs” outcome.”
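The link between the F-statistic, its degrees of freedom and the p-value can be checked with pf(), the F distribution function in base R. The sketch below refits the model (same Obs values, with the Treatment labels B, B, B, B, A, A that reproduce the output above) and also shows that, with a single predictor, F equals the squared t value of the TreatmentB coefficient.

```r
obs <- c(6.472687, 7.017110, 6.200715, 6.613928, 6.829968, 7.387583)
treatment <- factor(c("B", "B", "B", "B", "A", "A"))
fit <- lm(obs ~ treatment)

f <- summary(fit)$fstatistic     # named vector: value, numdf, dendf
f                                # about 3.008 on 1 and 4 DF
p.model <- pf(f[["value"]], f[["numdf"]], f[["dendf"]], lower.tail = FALSE)
p.model                          # right-tail probability, about 0.158

# One predictor: F = t^2, so the overall and coefficient p-values agree
t.value <- summary(fit)$coefficients["treatmentB", "t value"]
t.value^2                                              # equals f[["value"]]
summary(fit)$coefficients["treatmentB", "Pr(>|t|)"]    # same as p.model
```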

Next, let’s examine the coefficient table of the lm.1 model:

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 7.1088 0.2507 28.350 9.21e-06 ***

TreatmentB -0.5327 0.3071 -1.734 0.158

The overall model p-value (0.1579) is similar to the p-value quantifying the significance of the “Treatment” factor to explain Obs. This is because the model had only one fixed effect (“Treatment” itself). So, the significance of the overall model is the same as the significance for this coefficient (subject to rounding error).

In the presence of multiple fixed effects, the overall model significance will be different from the significance of each coefficient corresponding to a specific covariate.

Why does the report show “TreatmentB” rather than “Treatment”? Note that the estimate of the “(Intercept)” is 7.1088. Let’s look at the mean Obs values within each of the 2 Treatment groups (A and B):

# Model: lm.1 <- lm(Obs ~ Treatment, mydata1)
mean(mydata1[mydata1$Treatment=="A",]$Obs)
# [1] 7.108776
mean(mydata1[mydata1$Treatment=="B",]$Obs)
# [1] 6.57611
# in general, subsetting can be accomplished by:
subset_data <- mydata1[which(mydata1$Treatment=='A' & mydata1$Day=='Day2'),]

The mean of the Obs values for {Treatment=A} is the same as the “Intercept” term.

The estimate for “TreatmentB” is negative (-0.5327). Note that:

7.108776 - 0.5327 ≈ 6.5761,

which is the mean of the “TreatmentB” cohort. Thus, the estimate for “(Intercept)” represents the mean of the “TreatmentA” (baseline) category, and the estimate for “TreatmentB” represents the difference between the “Treatment” “B” and “A” categories (B minus A).
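This relationship can also be checked programmatically. In the sketch below (same Obs values, with the Treatment labels B, B, B, B, A, A that reproduce the output above), tapply() computes the group means and model.matrix() shows the 0/1 indicator column that lm() builds for TreatmentB, with A as the baseline.

```r
obs <- c(6.472687, 7.017110, 6.200715, 6.613928, 6.829968, 7.387583)
treatment <- factor(c("B", "B", "B", "B", "A", "A"))   # levels "A" (baseline), "B"
fit <- lm(obs ~ treatment)

group.means <- tapply(obs, treatment, mean)   # mean Obs per Treatment level
coef(fit)                                     # (Intercept) ~ 7.1088, treatmentB ~ -0.5327
group.means                                   # A ~ 7.1088, B ~ 6.5761

# Intercept = mean of A; treatmentB = mean of B minus mean of A
all.equal(unname(coef(fit)),
          unname(c(group.means["A"], group.means["B"] - group.means["A"])))

model.matrix(fit)   # column "treatmentB" is 1 for B rows and 0 for A rows
```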

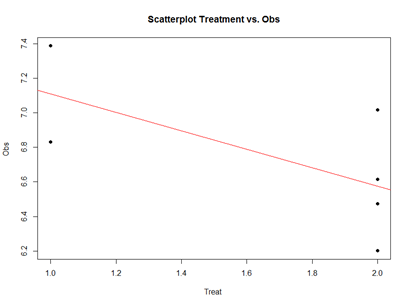

Analytically, the linear models represent “linear” associations, as in the plot below:

mydata2 <- data.frame(

Subject = c(13, 14, 15, 16, 17, 18), Day = c("Day1", "Day1", "Day1", "Day1", "Day1", "Day1"),

Treatment = c(2, 2, 2, 2, 1, 1),

Obs = c(6.472687, 7.017110, 6.200715, 6.613928, 6.829968, 7.387583)

)

plot(mydata2$Treatment, mydata2$Obs, main="Scatterplot Treatment vs. Obs",

xlab="Treatment", ylab="Obs ", pch=19)

# Add fit lines

abline(lm(mydata2$Obs~mydata2$Treatment), col="red")

The linear model represents the difference between Treatments “A” and “B” as a slope. Going from Treatment “A” to “B”, the line goes down by 0.5327 (in the units measuring the “Obs” variable), which is exactly the “TreatmentB” coefficient, relative to Treatment “A”.

Treatment “A” acts as the baseline (the origin of the local coordinate system) and is represented by the “(Intercept)”. The difference between Treatments “A” and “B” is expressed as a slope heading down from 7.108776 by 0.5327. The p-values in the coefficient table test whether each coefficient (intercept or Treatment) is “non-trivial”, i.e., different from zero.

In our case, the Intercept is significant (p ≈ 9.2×10⁻⁶), but the Treatment change (from A to B) is not (p ≈ 0.158). By default, lm() represents a categorical predictor through its factor levels, which are sorted lexicographically, so the first level (“A”) becomes the baseline represented by the intercept and “B” is represented by the slope. Categorical differences like Treatment “A” vs. “B” can be expressed as slopes because, when the two categories are coded one unit apart, the difference between the category means equals the slope of the line connecting them.
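The baseline can be changed with relevel(), a base-R function for factors. In this sketch (same Obs values and Treatment labels as above), making B the reference level turns the intercept into the mean of B and flips the sign of the Treatment coefficient, while the fitted values are unchanged.

```r
obs <- c(6.472687, 7.017110, 6.200715, 6.613928, 6.829968, 7.387583)
treatment <- factor(c("B", "B", "B", "B", "A", "A"))
levels(treatment)                                 # "A" "B": A comes first, so A is the baseline

fit.A <- lm(obs ~ treatment)
treatment.refB <- relevel(treatment, ref = "B")   # make B the reference level
fit.B <- lm(obs ~ treatment.refB)

coef(fit.A)   # (Intercept) ~ 7.1088 (mean of A), treatmentB ~ -0.5327
coef(fit.B)   # (Intercept) ~ 6.5761 (mean of B), treatment.refBA ~ +0.5327
```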

The advantage of representing the difference between two categories as a line crossing the two categories is that it allows us to use the same computational principles for numeric and categorical variables. That is, the same interpretations can be made for Treatment as for continuous (e.g., age) or discrete (e.g., dosage) covariates.
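To check that numeric and categorical codings give the same answer, this sketch fits the Obs values once with Treatment coded numerically as in mydata2 (2 = B, 1 = A) and once as a factor with baseline A. Because the two numeric codes are exactly one unit apart, the fitted slope equals the difference between the group means.

```r
obs <- c(6.472687, 7.017110, 6.200715, 6.613928, 6.829968, 7.387583)
treatment.num <- c(2, 2, 2, 2, 1, 1)                      # numeric coding, as in mydata2
treatment.fac <- factor(c("B", "B", "B", "B", "A", "A"))  # categorical coding, baseline A

slope <- coef(lm(obs ~ treatment.num))[["treatment.num"]]         # change in Obs per unit
difference <- coef(lm(obs ~ treatment.fac))[["treatment.facB"]]   # mean(B) - mean(A)
c(numeric.slope = slope, factor.difference = difference)          # both about -0.5327
```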

.....

....

- SOCR Home page: http://www.socr.umich.edu
