SMHS ReliabilityValidity

Scientific Methods for Health Sciences - Measurement Reliability and Validity

Overview

Reliability and validity are two of the most commonly used criteria for choosing a measurement. Reliability is the overall consistency of a measure, that is, its ability to produce similar results under consistent conditions. Validity is the extent to which a measurement accurately reflects reality, that is, the extent to which it measures what it claims to measure. Ideally, a measurement is both reliable and valid, but this is not always achievable. In many cases, we need to strike a balance between reliability and validity based on the objectives of the study. This section discusses measurement reliability and validity and illustrates their application with examples, focusing on the field of epidemiology.

Motivation

When choosing a measurement for a study, we always face two questions: can it produce similar results under consistent conditions, and does it measure what it claims to measure? Ideally, a measurement would capture the true state of what is being measured and would produce similar results when repeated under consistent conditions. In real studies, practical restrictions force us to choose among measurements that balance these two aspects. So, how do we choose between validity and reliability? How do these two properties influence the results of a test?

Theory

3.1) Measurement: refers to the systematic, replicable process through which the objects are quantified or classified with respect to a particular dimension and is usually achieved by assigning numerical values to the objects measured.

- There are four levels of measurement (the relationship among the values assigned to the attributes of a variable): (1) Nominal measure: the numerical values just ‘name’ the attributes uniquely and no ordering of the cases is implied; (2) Ordinal measure: the attributes can be rank-ordered, but the distances between attributes have no meaning. For example, the educational background of the participants in a study may be coded as 0=less than high school; 1=some high school; 2=high school degree; 3=some college; 4=college degree; 5=post college; (3) Interval measure: the distance between attributes is meaningful. For example, the temperature of the participants; (4) Ratio measure: there is a meaningful absolute zero, so that ratios (fractions) of values are meaningful.

- Variation in a repeated measure can be caused by (1) pure chance or unsystematic events caused by subject, observer, situations, instrument or data processing; (2) systematic inconsistency; (3) actual change in the underlying event being measured.

- Validity of a measure is the extent to which the measurement can describe or quantify what it intends to measure; reliability of a measure is the extent to which a measure can be depended upon to secure consistent results in repeated application.

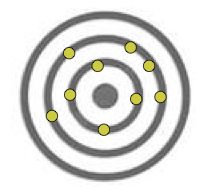

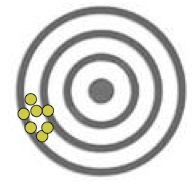

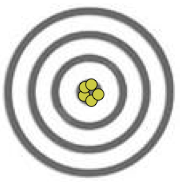

The charts above show the three possible outcomes (from left to right): valid but not reliable, reliable but not valid, and both valid and reliable.
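One way to make these three outcomes concrete is to compare repeated readings against a known true value. The sketch below is illustrative only: it assumes a hypothetical true value of 100 and three made-up sets of readings, and it treats the average error (bias) as a rough stand-in for validity and the standard deviation of the readings as a rough stand-in for reliability. This is a simplification, not a formal definition of either concept.

```python
from statistics import mean, pstdev

TRUE_VALUE = 100.0  # hypothetical true value of the quantity being measured

# Hypothetical repeated readings from three instruments (illustrative data only).
readings = {
    "valid, not reliable": [90.0, 110.0, 95.0, 105.0, 100.0],
    "reliable, not valid": [109.0, 110.0, 111.0, 110.0, 110.0],
    "valid and reliable": [99.0, 100.0, 101.0, 100.0, 100.0],
}


def bias_and_spread(values, true_value):
    """Return (bias, spread): mean error and population standard deviation."""
    return mean(values) - true_value, pstdev(values)


for label, values in readings.items():
    bias, spread = bias_and_spread(values, TRUE_VALUE)
    print(f"{label}: bias = {bias:+.2f}, spread = {spread:.2f}")
```

In this toy data, the first instrument is centered on the true value but scattered, the second is tightly clustered but off target, and the third is both on target and tightly clustered.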

3.2) Validity: validity is the extent to which the assessment measures what it is supposed to measure, while reliability is the ability to replicate results on the same sample if the test is repeated. A valid measurement does not necessarily produce similar results in repeated tests. Similarly, a reliable measure is not necessarily valid.

There are different types of validity:

- Construct validity: refers to the extent to which the operation actually measures what the theory intends it to measure. It involves the empirical and theoretical support for the interpretation of the measure. (1) Convergent validity: refers to the extent to which a measure is correlated with other measures that it is theoretically expected to correlate with; (2) Discriminant validity: refers to whether measures that are supposed to be unrelated are in fact unrelated.

- Content validity: a non-statistical type of validity that concerns the extent to which the content of the test matches the content associated with the construct. (1) Representation validity: the extent to which an abstract theoretical construct can be turned into a specific practical test; (2) Face validity: whether the test appears to measure a certain criterion.

- Criterion validity: involves the correlation between the test and a criterion variable taken as representative of the construct, and compares the test with other measures or outcomes. (1) Concurrent validity: refers to the extent to which the operation correlates with other measures of the same construct measured at the same time; (2) Predictive validity: refers to the extent to which the operation can predict other measures of the same construct measured at a later time.

- Experimental validity: validity of the design of experimental research studies. (1) Statistical conclusion validity: the extent to which conclusions about the relationship among variables based on the data are correct or reasonable. It involves ensuring the use of adequate sampling procedures, appropriate statistical tests and reliable measurement procedures; (2) Internal validity: the extent to which conclusions about causal relationships can be made; (3) External validity: the extent to which the results of the study can be held to be true in the general case.
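Convergent, discriminant and criterion validity in the list above are all typically assessed through correlations between measures. The following is a minimal sketch, assuming three hypothetical sets of scores for the same six participants (`new_scale`, `established_scale` and `unrelated_measure` are made-up names and values): a high correlation with an established measure of the same construct would support convergent validity, while a correlation near zero with a theoretically unrelated measure would support discriminant validity.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical scores for six participants (illustrative data only).
new_scale = [10, 12, 15, 18, 20, 23]          # measure being validated
established_scale = [11, 13, 14, 19, 21, 22]  # accepted measure of the same construct
unrelated_measure = [7, 3, 6, 6, 3, 7]        # measure of a theoretically unrelated construct

r_convergent = pearson_r(new_scale, established_scale)
r_discriminant = pearson_r(new_scale, unrelated_measure)

print(f"convergent r   = {r_convergent:.2f}")
print(f"discriminant r = {r_discriminant:.2f}")
```

What counts as "high" or "near zero" depends on the field and the sample size; the sketch only shows the mechanics of the comparison.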

3.3) Reliability (repeatability) of tests: can the results be replicated if the test is redone? The results may be influenced by three factors: (1) Intrasubject variation: variation within individual subjects; (2) Intraobserver variation: variation in reading of results by the same reader; (3) Interobserver variation: variation between those reading results.

- Types of Reliability: (1) Test-retest reliability: measure of reliability obtained by administering the same test twice over a period of time to a group of individuals; (2) Parallel forms reliability: measure of reliability obtained by administering different versions of an assessment tool to the same group of individuals; (3) Inter-rater reliability: measure of reliability used to assess the extent to which different judges or raters agree in their assessment decisions; (4) Internal consistency reliability: measure of reliability used to evaluate the extent to which different test items that probe the same construct produce similar results.
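Internal consistency reliability is commonly summarized with Cronbach's alpha, $\alpha=\frac{k}{k-1}\left(1-\frac{\sum_i s_i^2}{s_T^2}\right)$, where $k$ is the number of items, $s_i^2$ is the variance of item $i$ and $s_T^2$ is the variance of the total score. The sketch below assumes a hypothetical 4-item questionnaire answered by five respondents; the data and the function name `cronbach_alpha` are illustrative.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a list of rows (one row of item scores per respondent)."""
    k = len(item_scores[0])                      # number of items
    columns = list(zip(*item_scores))            # scores grouped by item
    item_variance_sum = sum(variance(col) for col in columns)
    total_variance = variance([sum(row) for row in item_scores])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Hypothetical responses: 5 respondents x 4 items (illustrative data only).
responses = [
    [4, 5, 4, 5],
    [2, 3, 2, 2],
    [3, 3, 4, 3],
    [5, 5, 5, 4],
    [1, 2, 1, 2],
]

alpha = cronbach_alpha(responses)
print(f"Cronbach's alpha = {alpha:.3f}")
```

Values of alpha close to 1 indicate that the items vary together, which is what one would expect when they probe the same construct.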

3.4) Kappa statistic: Answers the question of ‘How much better is the agreement between observers than would be expected by chance alone?’ $Kappa=\frac{(\% \, agreement\, observed)-(\% \,agreement\,expected\,by\, chance\, alone)} {100\%-(\% \, agreement\, expected\, by\, chance\, alone)}$

$Percent\,agreement=\frac{number\,in\,cells\,that\,'agree'}{Total\,number\, readings}*100$

Calculation of Kappa:

| | Reader 1: Positive | Reader 1: Negative | Total |
| --- | --- | --- | --- |
| Reader 2: Positive | 180 | 40 | 220 |
| Reader 2: Negative | 50 | 230 | 280 |
| Total | 230 | 270 | 500 |

- Marginal proportions: the proportion of tests that reader 1 rates as positive $=\frac{180+50}{500}=46\%$; the proportion of tests that reader 1 rates as negative = 54%. If the results from reader 1 and reader 2 are independent, then reader 1 should rate 46% of tests as positive regardless of reader 2’s scores.

- Expected agreement based on chance alone: for the 220 tests that reader 2 rates as positive, we expect reader 1 to be positive 46% of the time ($0.46\times 220\approx 101$); for the 280 tests that reader 2 rates as negative, we expect reader 1 to be negative 54% of the time ($0.54\times 280\approx 151$):

| | Reader 1: Positive | Reader 1: Negative | Total |
| --- | --- | --- | --- |
| Reader 2: Positive | 101 | 119 | 220 |
| Reader 2: Negative | 129 | 151 | 280 |
| Total | 230 | 270 | 500 |

- Expected agreement by chance $=\frac{101+151}{500}=50.4\%$.

- Observed agreement $=\frac{180+230}{500}=82\%$; expected agreement based on chance = 50.4%.

$Kappa=\frac{(\% \, agreement\, observed)-(\% \,agreement\,expected\,by\, chance\, alone)} {100\%-(\% \, agreement\, expected\, by\, chance\, alone)}=\frac{82\% -50.4\% }{100\% -50.4\%}=63.71\%$

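Interpretation of Kappa: > 0.75 excellent; 0.40 – 0.75 intermediate to good; < 0.40 poor reliability. The value of 63.71% (about 0.64) obtained above therefore indicates intermediate to good agreement between the two readers.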
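The Kappa calculation above can be reproduced with a short script. This is a minimal sketch of the statistic commonly known as Cohen's kappa, using the 2×2 counts from the example; the function name `cohen_kappa` and the table layout (rows for reader 2, columns for reader 1) are choices made here for illustration. Because the script does not round the expected counts to whole numbers, it gives about 0.6365 rather than the 0.6371 obtained by hand.

```python
def cohen_kappa(table):
    """Return (observed, expected, kappa) for a square agreement table.

    table[i][j] is the number of tests that reader 2 placed in category i
    and reader 1 placed in category j.
    """
    total = sum(sum(row) for row in table)
    # Observed agreement: proportion of tests on the diagonal.
    observed = sum(table[i][i] for i in range(len(table))) / total
    # Expected agreement under independence, from the marginal totals.
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    kappa = (observed - expected) / (1 - expected)
    return observed, expected, kappa


# Counts from the worked example (rows: reader 2, columns: reader 1).
table = [[180, 40],
         [50, 230]]

observed, expected, kappa = cohen_kappa(table)
print(f"observed agreement = {observed:.1%}")
print(f"expected agreement = {expected:.2%}")
print(f"kappa              = {kappa:.4f}")
```

The same function works for more than two rating categories, as long as both readers use the same categories in the same order.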

Applications

This article presents a comprehensive introduction to measurement, validity and reliability, and illustrates the concepts and their application with examples. It is well developed and serves as a good introduction to the material covered in this lecture.

This article presents a general introduction to reliability, validity and generalizability, and examines various problems with measurement. It gives a comprehensive analysis of reliability and validity, including definitions, different ways to measure them, and the problems associated with these characteristics. It is a good starting point for learning about measurement reliability and validity.

This article assessed the reliability and validity of the Childhood Trauma Questionnaire (CTQ), a retrospective measure of child abuse and neglect. 286 drug- or alcohol-dependent patients (aged 24–68 years) were given the CTQ as part of a larger test battery, and 40 of these patients were given the questionnaire again after an interval of 2–6 months. 68 subjects were also given the Childhood Trauma Interview. Principal-components analysis of responses on the CTQ yielded 4 rotated orthogonal factors: physical and emotional abuse, emotional neglect, sexual abuse, and physical neglect. The CTQ demonstrated high internal consistency and good test-retest reliability over an interval of 2–6 months. The CTQ also demonstrated convergence with the Childhood Trauma Interview, indicating that subjects' reports of child abuse and neglect based on the CTQ were highly stable, both over time and across types of instruments.

Software

- SOCR Cronbach's alpha calculator webapp (coming up) ...

Problems

6.1) In public health practice, optimizing the validity of tests is important in order to:

a. reduce health care costs

b. reduce unnecessary stress for patients

c. be able to identify opportunities for intervention early in the course of disease

d. all of the above

6.2) The Kappa statistic is used to measure ___ of a test?

a. sensitivity

b. reliability

c. positive predictive value

d. specificity

6.3) Randomization of treatment groups ensures the study’s external validity.

a. True

b. False

6.4) In a study investigating whether a new serum-based screening test for pancreatic cancer allowed for earlier detection than traditional tests, the researchers found that those who enrolled in the study were more likely to have a family history of pancreatic cancer than those who did not. This characteristic of the study population affects the study’s:

a. Internal validity

b. External validity

c. Both

d. Neither

6.5) As sample size increases:

a. Sampling variability increases and the chance of selecting an unrepresentative sample increases

b. Sampling variability increases and the chance of selecting an unrepresentative sample decreases

c. Sampling variability decreases and the chance of selecting an unrepresentative sample decreases

d. Sampling variability decreases and the chance of selecting an unrepresentative sample increases