Difference between revisions of "SMHS SurvivalAnalysis"

(→Hazard Ratios) |

(→Theory) |

||

| (85 intermediate revisions by 2 users not shown) | |||

| Line 2: | Line 2: | ||

===Overview=== | ===Overview=== | ||

| − | Survival analysis is statistical methods for analyzing longitudinal data on the occurrence of events. Events may include death, injury, onset of illness, recovery from illness (binary variables) or transition above or below the clinical threshold of a meaningful continuous variable (e.g. [http://en.wikipedia.org/wiki/CD4 CD4 counts]). Typically, survival analysis accommodates data from randomized clinical | + | Survival analysis is statistical methods for analyzing longitudinal data on the occurrence of events. Events may include death, injury, onset of illness, recovery from illness (binary variables) or transition above or below the clinical threshold of a meaningful continuous variable (e.g. [http://en.wikipedia.org/wiki/CD4 CD4 counts]). Typically, survival analysis accommodates data from randomized clinical trials or cohort study designs. In this section, we will present a general introduction to survival analysis including terminology and data structure, survival/hazard functions, parametric versus semi-parametric regression techniques and introduction to (non-parametric) [http://en.wikipedia.org/wiki/Kaplan%E2%80%93Meier_estimator Kaplan-Meier methods]. Code examples are also included showing the applications survival analysis in practical studies. |

===Motivation=== | ===Motivation=== | ||

| Line 8: | Line 8: | ||

===Theory=== | ===Theory=== | ||

| + | |||

| + | '''Risk''' is the expected value of an undesirable outcome. To estimate the risk, we combine (weight-average) the probabilities of various possible events (outcomes) and some assessment of the corresponding harm into a single value, the risk. For a simple binary possibility of ''accident'' vs. ''no-accident'' situation, the Risk = (probability of the accident occurring) \(\times \) (expected loss in case of the accident). For instance, probability of accident for certain sport activity may be equal to \(0.001\), which would be associated with a loss of \(\$ 10,000\) healthcare costs, then total risk of playing the sport is a loss of \(0.001\times \$10,000=\$10\). | ||

| + | |||

| + | In general, when there are multiple types of negative outcomes (e.g., types of potential injuries), the total risk is the weighted-average of all different accidents (outcomes): | ||

| + | $$Risk = \sum_{\text{For all accidents}} {(\text{probability of the accident occurring}) \times (\text{expected loss in case of the accident})}.$$ | ||

| + | |||

| + | For example, in certain clinical decision making, treatment X may lead to an adverse event A with probability of \(0.01\), which is associated with a (monetary) loss of \(\$1,000\), and a different probability of \(0.000001\) of an adverse event of type B, associated with a loss of \(\$200,000\), then the total risk (loss expectancy) would be: | ||

| + | $$Risk= (0.01\times \$1,000) + (0.000001\times \$200,000) = \$10.2.$$ | ||

| + | |||

| + | ====Survival function==== | ||

| + | The '''survival function''', is defined as | ||

| + | \(S(t) = P(T > t), \) where \(t\) is time, \(T\) is a random variable denoting the time of event (e.g., death), and \(P\) is the probability function. Hence, the survival function is the probability that the time of event is bigger than some specified time \(t\). It is common to assume \(S(0) = 1\), as the possibility of immediate death is unlikely, most of the time. If \(u \ge t\), then knowing that \(T > u\) yields \(T > t\), e.g., the survival function is decreasing. Thus, \(S(t) \ge S(u)\) for all \(u \ge t\) -- for all subjects, survival to a later age is only possible if subjects have survived all previous younger ages. The survival function usually tends to zero as \(T\) (age) increases. | ||

| + | |||

====Hazard Ratios==== | ====Hazard Ratios==== | ||

| − | Hazard is the slope of the survival | + | Hazard is the slope of the survival function, which measures of how rapidly critical events occur (e.g., subjects are dying). |

* The hazard ratio compares two treatments. If the hazard ratio is 2.0, then the rate of deaths in one treatment group is twice the rate in the other group. | * The hazard ratio compares two treatments. If the hazard ratio is 2.0, then the rate of deaths in one treatment group is twice the rate in the other group. | ||

* The hazard ratio is not computed at any one time point, but is computed from all the data in the survival curve. | * The hazard ratio is not computed at any one time point, but is computed from all the data in the survival curve. | ||

| Line 15: | Line 28: | ||

* If the hazard ratio is not consistent over time, the value reported for the hazard ratio may not be useful. If two survival curves cross, the hazard ratios are certainly not consistent (unless they cross at late time points, when there are few subjects still being followed so there is a lot of uncertainty in the true position of the survival curves). | * If the hazard ratio is not consistent over time, the value reported for the hazard ratio may not be useful. If two survival curves cross, the hazard ratios are certainly not consistent (unless they cross at late time points, when there are few subjects still being followed so there is a lot of uncertainty in the true position of the survival curves). | ||

* The hazard ratio is not directly related to the ratio of median survival times. A hazard ratio of 2.0 does not mean that the median survival time is doubled (or halved). A hazard ratio of 2.0 means a patient in one treatment group who has not died (or progressed, or whatever end point is tracked) at a certain time point has twice the probability of having died (or progressed...) by the next time point compared to a patient in the other treatment group. | * The hazard ratio is not directly related to the ratio of median survival times. A hazard ratio of 2.0 does not mean that the median survival time is doubled (or halved). A hazard ratio of 2.0 means a patient in one treatment group who has not died (or progressed, or whatever end point is tracked) at a certain time point has twice the probability of having died (or progressed...) by the next time point compared to a patient in the other treatment group. | ||

| − | * Hazard ratios, and their confidence intervals, may be computed using two methods, each reporting both the hazard ratio and its reciprocal. If people in group A die at twice the rate of people in group B (HR=2.0), then people in group B die at half the rate of people in group A (HR=0.5). | + | * Hazard ratios, and their confidence intervals, may be computed using two methods (see below LogRank and Mantel-Haenszel), each reporting both the hazard ratio and its reciprocal. If people in group A die at twice the rate of people in group B (HR=2.0), then people in group B die at half the rate of people in group A (HR=0.5). |

| − | + | ||

| − | + | ====The LogRank and Mantel-Haenszel methods for computing hazard ratios==== | |

| − | : | + | Both, the LogRank and Mantel-Haenszel methods usually give nearly identical results, unless in situations when several subjects die at the same time or when the hazard ratio is far from 1.0. |

| − | + | =====The Mantel-Haenszel method===== | |

| − | + | The Mantel-Haenszel method reports hazard ratios that are further from 1.0 (so the reported hazard ratio is too large when the hazard ratio is greater than 1.0, and too small when the hazard ratio is less than 1.0): | |

| − | + | # Compute the [http://www.biostat.au.dk/teaching/survival/F2004/survival-2004-1.pdf total variance, V]; | |

| − | + | # Compute \(K = \frac{O1 - E1}{V}\), where \(O1\) - is the total ''observed'' number of events in group1, \(E1\) is the total ''expected'' number of events in group1. You'd get the same value of \(K\) if you used the other group; | |

| − | + | # The hazard ratio equals \(e^K\); | |

| − | + | # The 95% confidence interval of the hazard ratio is: (\(e^{K - \frac{1.96}{\sqrt{V}}}$, $e^{K + \frac{1.96}{\sqrt{V}}}\)). | |

| − | + | ||

| − | + | =====The LogRank method===== | |

| + | The LogRank method is also referred to as O/E method and reports values that are closer to 1.0 than the true Hazard Ratio, especially when the hazard ratio is large or the sample size is large. When there are ties, both methods are less accurate. The logrank methods tend to report hazard ratios that are even closer to 1.0 (so the reported hazard ratio is too small when the hazard ratio is greater than 1.0, and too large when the hazard ratio is less than 1.0): | ||

| + | # As part of the Kaplan-Meier calculations, compute the number of observed events (deaths, usually) in each group ($O_a$ and $O_b$), and the number of expected events assuming a null hypothesis of no difference in survival (\(E_a\) and \(E_b\)); | ||

| + | # The hazard ratio then is: \(HR= \frac{\frac{O_a}{E_a}}{\frac{O_b}{E_b}}\); | ||

| + | # The standard error of the natural logarithm of the hazard ratio is: \(\ln{HR}=\sqrt{\frac{1}{E_a} + \frac{1}{E_b}}\); | ||

| + | # The lower and upper limits of the 95% confidence interval of the hazard ratio are: \(e^{\big\{ \ln(O_a)-\ln(E_a) \pm 1.96 \sqrt{\frac{1}{E_a} + \frac{1}{E_b}}\big\}}\). Recall that \(HR=\frac{O_a E_b}{O_b E_a}\), and under the null hypothesis \(E_a=E_b\), so \(\ln(HR)= \ln(O_a)-\ln(E_a).\) | ||

====Survival analysis goals==== | ====Survival analysis goals==== | ||

| − | * Estimate time-to-event for a group of individuals, such as time until second heart-attack for a group of MI patients; | + | * Estimate time-to-event for a group of individuals, such as time until second heart-attack for a group of '''Myocardial Infarction (MI)''' patients; |

| − | * To compare time-to-event between two or more groups, such as treated vs. placebo | + | * To compare time-to-event between two or more groups, such as treated vs. placebo MI patients in a randomized controlled trial; |

* To assess the relationship of co-variables to time-to-event, such as: does weight, insulin resistance, or cholesterol influence survival time of MI patients? | * To assess the relationship of co-variables to time-to-event, such as: does weight, insulin resistance, or cholesterol influence survival time of MI patients? | ||

====Terminology==== | ====Terminology==== | ||

| − | * '''Time-to-event''': The time from entry into a study until a subject has a particular outcome | + | * '''Time-to-event''': The time from entry into a study until a subject has a particular outcome. |

| − | * '''Censoring''': Subjects are said to be censored if they are lost to follow up or drop out of the study, or if the study ends before they die or have another outcome of interest. They are (censored) counted as alive or disease-free for the time they were enrolled in the study. If dropout is related to both outcome and treatment, dropouts may bias the results. | + | * '''Censoring''': Subjects are said to be censored if they are lost to follow up or drop out of the study, or if the study ends before they die or have another outcome of interest. They are (censored) counted as alive or disease-free for the time they were enrolled in the study. If dropout is related to both outcome and treatment, dropouts may bias the results. At any given time, the variable ''censor'' indicates whether the subject continues with the study (censor=1), but typically, each $c_i$ is paired with a failure time $T_i$ indicating the patient died (event occurred). If a patient is lost to follow up, $c_i=0$, indicating the patient had no record at time $T_i$, i.e., a right-censored failure time. |

| + | |||

* '''Two-variable outcome''': time variable: $t_i$ = time of last disease-free observation or time at event; censoring variable: $c_i=1$ if had the event; $c_i =0$ no event by time $t_i$. | * '''Two-variable outcome''': time variable: $t_i$ = time of last disease-free observation or time at event; censoring variable: $c_i=1$ if had the event; $c_i =0$ no event by time $t_i$. | ||

* Right Censoring ($T>t$): Common examples: termination of the study; death due to a cause that is not the event of interest; loss to follow-up. We know that subject survived at least to time $t$. | * Right Censoring ($T>t$): Common examples: termination of the study; death due to a cause that is not the event of interest; loss to follow-up. We know that subject survived at least to time $t$. | ||

| Line 50: | Line 69: | ||

* Parametric survival analysis is based on ''Waiting Time'' distributions (e.g., [[AP_Statistics_Curriculum_2007_Exponential|exponential probability distribution]]); | * Parametric survival analysis is based on ''Waiting Time'' distributions (e.g., [[AP_Statistics_Curriculum_2007_Exponential|exponential probability distribution]]); | ||

* Assume that times-to-event for individuals in a dataset follow a continuous probability distribution (for which we may have an analytic mathematical representation or not). For all possible times $T_i$ after baseline, there is a certain probability that an individual will have an event at exactly time $T_i$. For example, human beings have a certain probability of dying at ages 1, 26, 86, 100, denoted by $P(T=1)$, $P(T=26)$, $P(T=86)$, $P(T=100)$, respectively, and these probabilities are obviously vastly different from one another; | * Assume that times-to-event for individuals in a dataset follow a continuous probability distribution (for which we may have an analytic mathematical representation or not). For all possible times $T_i$ after baseline, there is a certain probability that an individual will have an event at exactly time $T_i$. For example, human beings have a certain probability of dying at ages 1, 26, 86, 100, denoted by $P(T=1)$, $P(T=26)$, $P(T=86)$, $P(T=100)$, respectively, and these probabilities are obviously vastly different from one another; | ||

| − | * Probability density function f(t): In the case of human longevity, $T_i$ is unlikely to follow a unimodal normal distribution, because the probability of death is not highest in the middle ages, but at the beginning and end of life (bimodal?). The probability of the failure time occurring at exactly time $t$ (out of the whole range of possible $t$’s) is: $f(t)= | + | * Probability density function f(t): In the case of human longevity, $T_i$ is unlikely to follow a unimodal normal distribution, because the probability of death is not highest in the middle ages, but at the beginning and end of life (bimodal?). The probability of the failure time occurring at exactly time $t$ (out of the whole range of possible $t$’s) is: $f(t)=\lim_{∆t→0}\frac{P(t≤T<t+∆t)}{∆t}$. |

| − | * Example: Suppose we have the following hypothetical data (Figure below). People have a high chance of dying in their 70’s and 80’s, but they have a smaller chance of dying in their 90’s and 100’s, because few people make it long enough to die at these ages. | + | * Example: Suppose we have the following hypothetical data (Figure below). People have a high chance of dying in their 70’s and 80’s, but they have a smaller chance of dying in their 90’s and 100’s, because few people make it long enough to die at these ages. |

| + | |||

| + | <center> | ||

| + | {| class="wikitable" | ||

| + | |- | ||

| + | ! Ages||Frequencies||CDF(age)||S(age) | ||

| + | |- | ||

| + | |0-12||15||0.145631068||0.854368932 | ||

| + | |- | ||

| + | |16-26||4||0.184466019||0.815533981 | ||

| + | |- | ||

| + | |30-50||5||0.233009709||0.766990291 | ||

| + | |- | ||

| + | |62-78||20||0.427184466||0.572815534 | ||

| + | |- | ||

| + | |80-90||50||0.912621359||0.087378641 | ||

| + | |- | ||

| + | |92-110||9||1||0 | ||

| + | |} | ||

| + | </center> | ||

| + | |||

<center>[[Image:SMHS_SurvivalAnalysis_Fig3.png|500px]]</center> | <center>[[Image:SMHS_SurvivalAnalysis_Fig3.png|500px]]</center> | ||

| + | * The Survival function, $S(t)=1-F(t)$: The goal of survival analysis is to estimate and compare survival experiences of different groups. Survival experience is described by the cumulative survival function: $S(t)=1-P(T≤t)=1-F(t)$, where $F(t)$ is the [[SMHS_ProbabilityDistributions#Theory|CDF]] of $f(t)$. The Figure below shows the cumulative survival for the same hypothetical data, plotted as cumulative distribution rather than density. | ||

| + | <center>[[Image:SMHS_SurvivalAnalysis_Fig4.png|500px]]</center> | ||

| + | |||

| + | Try to use real-data and the [[SOCR_EduMaterials_AnalysisActivities_Survival|SOCR Survival Analysis]] and the [http://www.socr.ucla.edu/htmls/ana/Survival_Analysis.html SOCR Survival Java Applet] to generate a similar plot. | ||

| + | |||

| + | * Hazard function represents the probability that if you survive to $t$, you will succumb to the event in the next instant. | ||

| + | $$h(t)=\lim_{∆t→0} \frac{P(t≤T<t+∆t|T≥t)}{∆t}.$$ | ||

| + | |||

| + | : The Hazard function may also be expressed in terms of density and survival functions: $h(t)=\frac{f(t)}{S(t)}$. This is because of the [[SMHS_Probability#Theory|Bayesian rule]]: | ||

| + | $$h(t)dt = P(t≤T<t+dt|T≥t)=\frac{P(t≤T<t+dt \cap T≥t)}{P(T≥t)}=$$ | ||

| + | $$\frac{P(t≤T<t+dt)}{P(T≥t)} =\frac{f(t)dt}{S(t)}.$$ | ||

| + | |||

| + | : The Figure below illustrates an example plot of a hazard function depicting the hazard rate as an instantaneous incidence rate. | ||

| + | <center>[[Image:SMHS_SurvivalAnalysis_Fig5.png|500px]]</center> | ||

| + | |||

| + | ====Examples==== | ||

| + | * For uncensored data, the following failure time are observed for n=10 subjects. For convenience, the failure times have been ordered. Calculate $\hat{S}(20)$. | ||

| + | <center> | ||

| + | {| class="wikitable" style="text-align:center; width:45%" border="1" | ||

| + | |- | ||

| + | ! i||1||2||3||4||5||6||7||8||9||10 | ||

| + | |- | ||

| + | | $T_i$||2||5||8||12||15||21||25||29||30||34 | ||

| + | |} | ||

| + | </center> | ||

| + | : $\hat{S}(t)=\frac{1}{n}\sum_{i=1}^n{I(T_i>t)}$, where the indicator (or characteristic) function $I(A)$ is defined by: | ||

| + | $$I(A)= \begin{cases} | ||

| + | 1, & \text{A is true} \\ | ||

| + | 0, & \text{if A is false} | ||

| + | \end{cases}.$$ | ||

| + | Thus, $\hat{S}(20)= P(T>20) = 1-P(T\leq 20) \equiv \frac{1}{10} (0+0+0+0+0+1+1+1+1+1)$. | ||

| + | $$\hat{S}(20)=0.5.$$ | ||

| + | |||

| + | * For censored data ($c_i$ represents the censoring indicator), suppose the observed data were: | ||

| + | <center> | ||

| + | {| class="wikitable" style="text-align:center; width:45%" border="1" | ||

| + | |- | ||

| + | ! i||1||2||3||4||5||6||7||8||9||10 | ||

| + | |- | ||

| + | | $T_i$||2||5||8||12||15||21||25||29||30||34 | ||

| + | |- | ||

| + | | $c_i$||1||0||1||0||1||0||1||0||1||0 | ||

| + | |} | ||

| + | </center> | ||

| + | $$\hat{h}(2)=\frac{P(2\leq T < 3|T\geq 2)}{1} =\frac{P(2\leq T < 3)}{P(T\geq 2)}=\frac{1/10}{1}=0.1,$$ | ||

| + | $$\hat{h}(8)=\frac{P(8\leq T < 9|T\geq 8)}{1} =\frac{P(8\leq T < 9)}{P(T\geq 8)}=\frac{1/10}{8/10}= 0.125,$$ | ||

| + | $$\hat{h}(15)=\frac{P(15\leq T < 16|T\geq 15)}{1} =\frac{P(15\leq T < 16)}{P(T\geq 15)}=\frac{1/10}{6/10}=0.166.$$ | ||

| + | |||

| + | * Cumulative Hazard function is defined as $Λ(t)=\int_0^t{h(u)du}$. There is an important connection between cumulative hazard and survival: $S(t)=e^{-Λ(t)}$, although, the cumulative hazard function may be hard to interpret. | ||

| + | : Properties: | ||

| + | ::(1) $Λ(t)≥0$; | ||

| + | ::(2) $\lim_{t→∞}{Λ(t)}=\infty$; | ||

| + | ::(3) $Λ(0)=0$. | ||

| + | |||

| + | * ''Hazard vs. density'' example: at birth, each person has a certain probability of dying at any age; that’s the probability density (cf. ''marginal probability''). For example: a girl born today may have a 2% chance of dying at the age of 80 years. However, if a person survives for a while, the probabilities of prospective survival change (cf. ''conditional probability''). For example, a woman who is 79 today may have a 6% chance of dying at the age of 80. The figure bellow gives a set of possible probability density, failure, survival and hazard function. $F(t)$=cumulative failure=$1-e^{-t^{1.7}}$; $f(t)$=density function=$1.7 t^{0.7} e^{-t^{1.7}}$; $S(t)$=cumulative survival=$e^{-t^{1.7}}$; $h(t)$=hazard function=$1.7t^{0.7}$. | ||

| + | |||

| + | <center>[[Image:SMHS_SurvivalAnalysis_Fig6.png|500px]]</center> | ||

| + | |||

| + | # R plotting example | ||

| + | op <- par(mfrow=c(2,2)) ## configure the 2x2 plotting canvas | ||

| + | plot(x, 1.7*x^(0.7)* exp(-x*1.7), xlab = "x-values", ylab = "f(x)", type = "l", main="Probability Density") | ||

| + | plot(x, 1- exp(-x*1.7), xlab = "x-values", ylab = "F(x)", type = "l", main="Cumulative Distribution") | ||

| + | plot(x, exp(-x*1.7), xlab = "x-values", ylab = "S(x)", type = "l", main="Survival") | ||

| + | plot(x, 1.7*x^(0.7), xlab = "x-values", ylab = "h(x)", type = "l", main="Hazard") | ||

| + | |||

| + | ====Common density functions describing survival probability==== | ||

| + | * [[AP_Statistics_Curriculum_2007_Exponential| Exponential]] (hazard is constant over time, simplest model!): constant hazard function $h(t)=h$; exponential density functions: $P(T=t)=f(t)=he^{-ht}$; survival function $P(T>t)=S(t)=\int_t^{\infty} {he^{-hu} du}=-e^{-hu} |_t^{\infty}=e^{-ht}$. | ||

| + | :: See the [http://www.socr.ucla.edu/htmls/dist/Exponential_Distribution.html SOCR Exponential Distribution Calculator] | ||

| + | :: See the [http://www.distributome.org/V3/calc/ExponentialCalculator.html Probability Distributome Exponential Calculator] | ||

| + | |||

| + | * Example: $h(t)=0.1$ cases/person-year; Then | ||

| + | :: the probability of developing disease at year 10 is: $P(t=10)=0.01e^{-0.1*10}=0.01e^{-0.1}=0.009$; | ||

| + | :: the probability of surviving past year 10 is: $S(t)=e^{-0.01t}=90.5%$; | ||

| + | :: the cumulative risk through year 10 is 9.5% (complement of $P(T>10)$). | ||

| + | |||

| + | * Weibull (hazard function is increasing or decreasing over time) | ||

| + | :: See the [http://www.socr.ucla.edu/htmls/dist/Weibull_Distribution.html SOCR Weibull Distribution Calculator] | ||

| + | :: See the [http://www.distributome.org/V3/calc/WeibullCalculator.html Probability Distributome Weibull Calculator] | ||

| + | |||

| + | ====Mathematical formulation/relations==== | ||

| + | * Hazard from density and survival: $h(t)=\frac{f(t)}{S(t)}$ | ||

| + | * Survival from density: $S(t)=\int_t^{\infty} {f(u)du}$ | ||

| + | * Density from survival: $f(t)=-\frac{dS(t)}{dt}=S(t)h(t)$ | ||

| + | * Density from hazard: $f(t)=h(t) e^{-\int_0^t {h(u)du}}$ | ||

| + | * Survival from hazard: $S(t)=e^{-\int_0^t {h(u)du}}$ | ||

| + | * Hazard from survival: $h(t)=-\frac{d}{dt} \ln{S(t)}$ | ||

| + | * Cumulative hazard from survival: $Λ(t)=-\log{S(t)}$ | ||

| + | * Life expectancy (mean survival time): $E[T]=\int_0^{\infty} {tf(t)dt}=\int_0^{\infty} {S(t)}$, which is the area under the survival curve | ||

| + | * Restricted mean lifetime: $E[T∧L]=\int_0^L {S(t)dt}$ | ||

| + | * Mean residual lifetime: $m(t_0)=E[T-t_0|T>t_0]=\int_{t_0}^{\infty}{\frac{(t-t_0)f(t)dt}{S(t_0)}}=\int_{t_0}^{\infty} {\frac{S(t)dt}{S(t_0)}}$, set $t_0=0$ to obtain E[T]. | ||

| + | |||

| + | ====Hazard function models==== | ||

| + | * Parametric multivariate regression techniques: model the underlying hazard/survival function; assume that the dependent variable (time-to-event) takes on some known distribution, such as Weibull, exponential, or lognormal; estimates parameters of these distributions (e.g., baseline hazard function); estimates covariate-adjusted hazard ratios (a hazard ratio is a ratio of hazard rates); many times we care more about comparing groups than about estimating absolute survival. The model of parametric regression: components include a baseline hazard function (which may change over time) and a linear function of a set of k fixed covariates that when exponentiated gives the relative risk. | ||

| + | |||

| + | : ''Exponential'' model assumes fixed baseline hazard that we can estimate: with exponential distribution, $S(t)=λ e^{-λt}$, model applied: $\log{h_i(t)}=μ+β_1 x_{i1}+⋯+β_k x_{ik}$; | ||

| + | |||

| + | : ''Weibull'' model models the baseline hazard as a function of time: two parameters of shape ($\gamma$) and scale ($λ$) must be estimated to describe the underlying hazard function over time. With Weibull distribution: $S(t)=e^{-λt^{\gamma}}$, model applied: $\log {h_i(t)}= μ+α\log{t}+β_1 x_{i1}+⋯+β_k x_{ik}$; | ||

| + | |||

| + | : ''Cox regression'' (semi-parametric): Cox models the effect of predictors and covariates on the hazard rate but leaves the baseline hazard rate unspecified; also called proportional hazards regression; does not assume knowledge of absolute risk; estimates relative rather than absolute risk. | ||

| + | :: Components: a baseline hazard function that is left unspecified but must be positive (equal to the hazard when all covariates are 0); a linear function of a set of $k$ fixed covariates that is exponentiated (equal to the relative risk). | ||

| + | :: $\log{h_i(t)}= \log{h_0 (t)}+β_1 x_{i1}+⋯+β_k x_{ik}$; $h_i (t)=h_0(t) e^{β_1 x_{i1}+⋯+β_k x_{ik}}$. | ||

| + | :: The point is to compare the hazard rates of individuals who have different covariates: hence, called ''Proportional hazards'': $HR=\frac{h_1 (t)}{h_2 (t)}=\frac{h_0 (t) e^{βx_1 }}{h_0 (t) e^{βx_2}}=e^{β(x_1-x_2)}$, hazard functions should be strictly parallel. | ||

| + | |||

| + | :: Kaplan-Meier Estimates (non-parametric estimate of the survival function): no a priori math assumptions (either about the underlying hazard function or about proportional hazards); simply, the empirical probability of surviving past certain times in the sample (taking into account censoring); non-parametric estimate of the survival function; commonly used to describe survivorship of study population/s; commonly used to compare two study populations; intuitive graphical presentation. | ||

| + | |||

| + | : Limit of Kaplan-Meier: this approach is mainly descriptive, doesn’t control for covariates, requires categorical predictors and can’t accommodate time-dependent variables. | ||

| + | |||

| + | : With Kaplan-Meier: | ||

| + | :: For uncensored data: $\hat{S}(t)=\frac{1}{n}\sum_{i=1}^n{I(T_i>t)}$, where $I(A)=1$ if $A$ is true and 0 otherwise. | ||

| + | :: For censored data, to estimate $\hat{S}(t)$ we use [[SMHS_Probability#Theory |product rule from probability theory]]: $P(A\cap B)=P(A)*P(B)$ if $A$ and $B$ are independent. In survival analysis, intervals are defined by failures. e.g., $P(\text{surviving intervals 1 and 2})=$ $P(\text{surviving interval 1})\times P(\text{surviving interval 2 } | \text{ survived interval 1})$. For instance, | ||

| + | |||

| + | <center> | ||

| + | {| class="wikitable" style="text-align:center; width:45%" border="1" | ||

| + | |- | ||

| + | ! i||1||2||3||4||5 | ||

| + | |- | ||

| + | | $T_i$||4||6||7||12||12 | ||

| + | |- | ||

| + | | $c_i$||1||0||1||0||0 | ||

| + | |} | ||

| + | </center> | ||

| + | |||

| + | The second subject was lost to follow up, and cases 4 and 5 were alive after 12 months. The remaining 2 cases (1 and 3) died at times 4 and 7 months, respectively. To estimate the likelihood of a patient to survive 1 year (12 months) we break the 12 months into intervals anchored at points of dying (in this case 4 and 7 months): | ||

| + | $$ S(12) = P(\text{surviving intervals [0,4] and (4,7] and and (7,12]})=$$ | ||

| + | $$P(T>4)\times P(T>7|T>4)\times P(T>12|T>7)=$$ | ||

| + | $P(\text{surviving interval [0,4]})\times$ $P(\text{surviving interval (4,7]}|\text{survived interval [0,4]})$ $\times P(\text{surviving interval (7,12]}|\text{survived interval [0,7]})$ | ||

| + | |||

| + | :: Note the single censored case at time 6 months! $P(\text{surviving intervals [0,4] and (4,7] and (7,12]})= 4/5 \times 2/3 \times 2/2= 0.53$, as 4 of the five cases (2, 3, 4,5) make it beyond time 4; 2 out of the 3 cases (2, 4 and 5) that survived the 4th month survived to the 7th month, and 2 out of the 2 cases (4 and 5) known to have survived beyond the 7th month lived past 12th month. Thus, $ S(12)=0.53$ which is between the probability of surviving 12 months = 40% (we end with 2 survivors at the end) and 60% (3/5) (only 2 are known to have died during the year). | ||

| + | |||

| + | <center>[[Image:SMHS_SurvivalAnalysis_Fig1.c.png|500px]]</center> | ||

| + | |||

| + | ====Example==== | ||

| + | <center> | ||

| + | {| class="wikitable" style="text-align:center; width:45%" border="1" | ||

| + | |- | ||

| + | ! i||1||2||3||4||5||6||7||8||9||10 | ||

| + | |- | ||

| + | | $T_i$||2||5||8||12||15||21||25||29||30||34 | ||

| + | |- | ||

| + | | $c_i$||1||0||1||0||1||0||1||0||1||0 | ||

| + | |} | ||

| + | </center> | ||

| + | * To estimate $S(10)$: $S(10)=P(T>10)=P(T>10,T>8,T>5,T>2)=$ $P(T>10│T>8)P(T>8│T>5)P(T>5│T>2)P(T>2)=$ $7/7 * 7/8 * 9/9 * 9/10=0.7875$. So, $\hat{S}_{KM}(10)=0.7875$. | ||

| + | |||

| + | * To estimate $S(20)$: $S(20)=P(T>20)=P(T>20,T>15,T>10,T>8,T>5,T>2)=$ $P(T>20│T>15)P(T>15│T>10)P(T>10│T>8)P(T>8│T>5)P(T>5│T>2)P(T>2)=$ $6/6 * 5/7 * 7/7 * 7/8 * 9/9 * 9/10=0.5625$. So, $\hat{S}_{KM}(20)=0.5625$. | ||

| + | |||

| + | <center>[[Image:SMHS_SurvivalAnalysis_Fig1.d.png|500px]]</center> | ||

| + | |||

| + | ===Applications=== | ||

| + | * [[SOCR_EduMaterials_AnalysisActivities_Survival| The SOCR Survival Activity]] presents an example on survival analysis using the Kaplan-Meyer Method. This example is based on a dataset from “Modern applied statistics with S”, which contains two types of treatment groups denoted by 6-MP and control. | ||

| + | |||

| + | * [http://www.stefvanbuuren.nl/publications/Multiple%20imputation%20-%20Stat%20Med%201999.pdf This study] investigated a non-response problem in survival analysis where the occurrence of missing data in the risk factor is related to mortality. The available data in the study suggest that the process that created the missing data depends jointly on survival and the unknown blood pressure, thereby distorting the relation of interest. Multiple imputations are used to impute missing blood pressure and then analyze the data under a variety of non-response models. One special modeling problem is treated in detail; the construction of a predictive model for drawing imputations if the number of variables is large. Risk estimates for these data appear robust to even large departures from the simplest non-response model, and are similar to those derived under deletion of the incomplete records. | ||

| + | |||

| + | ===Software === | ||

| + | * SOCR Tools: [http://www.socr.umich.edu/html/mod Modeler], [http://www.socr.ucla.edu/htmls/ana/Survival_Analysis.html SUrvival Analysis Java Applet] | ||

| + | * UCLA R-Survival R code example: [http://www.ats.ucla.edu/stat/r/examples/asa/asa_ch2_r.htm Chapter 2], [http://www.ats.ucla.edu/stat/sas/examples/asa/asa4.htm Chapter 4], [http://www.ats.ucla.edu/stat/sas/examples/asa/asa5.htm Chapter 5] and [http://www.ats.ucla.edu/stat/sas/examples/asa/asa6.htm Chapter 6] | ||

| + | |||

| + | ====R-Code Example==== | ||

| + | R example using the ''KMsurv'' survival package. | ||

| + | |||

| + | hmohiv<-read.table("http://www.ats.ucla.edu/stat/r/examples/asa/hmohiv.csv", sep=",", header = TRUE) | ||

| + | library(survival) | ||

| + | attach(hmohiv) | ||

| + | mini<-hmohiv[ID<=5,] | ||

| + | mini | ||

| + | |||

| + | > mini | ||

| + | ID time age drug censor entdate enddate | ||

| + | 1 1 5 46 0 1 5/15/1990 10/14/1990 | ||

| + | 2 2 6 35 1 0 9/19/1989 3/20/1990 | ||

| + | 3 3 8 30 1 1 4/21/1991 12/20/1991 | ||

| + | 4 4 3 30 1 1 1/3/1991 4/4/1991 | ||

| + | 5 5 22 36 0 1 9/18/1989 7/19/1991 | ||

| + | |||

| + | attach(mini) | ||

| + | mini.surv <- survfit(Surv(time, censor)~ 1, conf.type="none") | ||

| + | summary(mini.surv) | ||

| + | |||

| + | Call: survfit(formula = Surv(time, censor) ~ 1, conf.type = "none") | ||

| + | time n.risk n.event survival std.err | ||

| + | 3 5 1 0.8 0.179 | ||

| + | 5 4 1 0.6 0.219 | ||

| + | 8 2 1 0.3 0.239 | ||

| + | 22 1 1 0.0 NaN | ||

| + | |||

| + | plot(mini.surv, xlab="Time", ylab="Survival Probability") | ||

| + | detach(mini) | ||

| + | attach(hmohiv) | ||

| + | hmohiv.surv <- survfit( Surv(time, censor)~ 1, conf.type="none") | ||

| + | summary(hmohiv.surv) | ||

| + | plot (hmohiv.surv, xlab="Time", ylab="Survival Probability" ) | ||

| + | |||

| + | <center>[[Image:SMHS_SurvivalAnalysis_Fig7.png|500px]]</center> | ||

| + | |||

| + | ## Example using package KMsurv | ||

| + | library(KMsurv) | ||

| + | library(nlme) | ||

| + | t6m<-floor(time/6) | ||

| + | tall<-data.frame(t6m, censor) | ||

| + | die<-gsummary(tall, sum, groups=t6m) | ||

| + | total<-gsummary(tall, length, groups=t6m) | ||

| + | rm(t6m) | ||

| + | ltab.data<-cbind(die[,1:2], total[,2]) | ||

| + | detach(hmohiv) | ||

| + | attach(ltab.data) | ||

| + | |||

| + | lt=length(t6m) | ||

| + | t6m[lt+1]=NA | ||

| + | nevent=censor | ||

| + | nlost=total[,2] - censor | ||

| + | mytable<-lifetab(t6m, 100, nlost, nevent) | ||

| + | mytable[,1:5] | ||

| + | |||

| + | nsubs nlost nrisk nevent surv | ||

| + | 0-1 100 10 95.0 41 1.00000000 | ||

| + | 1-2 49 3 47.5 21 0.56842105 | ||

| + | 2-3 25 2 24.0 6 0.31711911 | ||

| + | 3-4 17 1 16.5 1 0.23783934 | ||

| + | 4-5 15 1 14.5 0 0.22342483 | ||

| + | 5-6 14 0 14.0 5 0.22342483 | ||

| + | 6-7 9 0 9.0 1 0.14363025 | ||

| + | 7-8 8 0 8.0 1 0.12767133 | ||

| + | 8-9 7 0 7.0 1 0.11171242 | ||

| + | 9-10 6 1 5.5 3 0.09575350 | ||

| + | 10-NA 2 2 1.0 0 0.04352432 | ||

| + | |||

| + | plot(t6m[1:3], mytable[,5], type="s", xlab="Survival time in every 6 month", ylab="Proportion Surviving") | ||

| + | plot(t6m[1:11], mytable[,5], type="b", xlab="Survival time in every 6 month", ylab="Proportion Surviving") | ||

| + | <center>[[Image:SMHS_SurvivalAnalysis_Fig8.png|500px]]</center> | ||

| + | |||

| + | ===Problems=== | ||

| + | * Time until death, $T$, has the following survival function $S(t)=e^{-λt}$. Show that the mean is greater than the median. | ||

| + | |||

| + | * Suppose that $T$ follows a [[AP_Statistics_Curriculum_2007_Uniform|Uniform distribution on (0,θ]]]. That is, the density can be written as $f(t)=\frac{1}{θ}$ for $t\in (0,θ]$ and 0 elsewhere. Determine whether or not the hazard function, $h(t)$, is a constant on the interval $t\in (0,θ]$. | ||

| + | |||

| + | * The following data are observed: | ||

| + | ** Compute $\hat{S}_{KM}(20)$. | ||

| + | ** Compute $\hat{Λ}(20)$. | ||

| + | ** An estimator of the variance $V(\hat{Λ}(t))$ is given by $\int_0^t{\frac{1}{Y^2(s)} dN(s)}$, where $Y(s)=\sum_{i=1}^n{Y_i(s)}$ and $dN(s)=\sum_{i=1}^n{dN_i(s)}$. Compute $V(\hat{Λ}(t))$. | ||

| + | |||

| + | <center> | ||

| + | {| class="wikitable" style="text-align:center;"border="1" | ||

| + | |- | ||

| + | ! i||1||2||3||4||5||6||7||8||9||10 | ||

| + | |- | ||

| + | | $X_i$||2||4||8||11||15||21||25||29||30||34 | ||

| + | |- | ||

| + | | $∆_i$||0||1||0||1||0||1||0||1||0||0 | ||

| + | |} | ||

| + | </center> | ||

| + | |||

| + | * Use these data (from [[SOCR_EduMaterials_AnalysisActivities_Survival |Example 1]] of the [http://www.socr.ucla.edu/htmls/ana/Survival_Analysis.html SOCR Survival Analysis Java Applet]) to replicate the results in R: | ||

| + | |||

| + | <center> | ||

| + | {| class="wikitable" style="text-align:center;"border="1" | ||

| + | |- | ||

| + | ! Time||Censor||Group | ||

| + | |- | ||

| + | | 6||1||6-MP | ||

| + | |- | ||

| + | | 6||1||6-MP | ||

| + | |- | ||

| + | | 6||1||6-MP | ||

| + | |- | ||

| + | | 6||0||6-MP | ||

| + | |- | ||

| + | | 7||1||6-MP | ||

| + | |- | ||

| + | | 9||0||6-MP | ||

| + | |- | ||

| + | | 10||1||6-MP | ||

| + | |- | ||

| + | | 10||0||6-MP | ||

| + | |- | ||

| + | | 11||0||6-MP | ||

| + | |- | ||

| + | | 13||1||6-MP | ||

| + | |- | ||

| + | | 16||1||6-MP | ||

| + | |- | ||

| + | | 17||0||6-MP | ||

| + | |- | ||

| + | | 19||0||6-MP | ||

| + | |- | ||

| + | | 20||0||6-MP | ||

| + | |- | ||

| + | | 22||1||6-MP | ||

| + | |- | ||

| + | | 23||1||6-MP | ||

| + | |- | ||

| + | | 25||0||6-MP | ||

| + | |- | ||

| + | | 32||0||6-MP | ||

| + | |- | ||

| + | | 32||0||6-MP | ||

| + | |- | ||

| + | | 34||0||6-MP | ||

| + | |- | ||

| + | | 35||0||6-MP | ||

| + | |- | ||

| + | | 1||1||control | ||

| + | |- | ||

| + | | 1||1||control | ||

| + | |- | ||

| + | | 2||1||control | ||

| + | |- | ||

| + | | 2||1||control | ||

| + | |- | ||

| + | | 3||1||control | ||

| + | |- | ||

| + | | 4||1||control | ||

| + | |- | ||

| + | | 4||1||control | ||

| + | |- | ||

| + | | 5||1||control | ||

| + | |- | ||

| + | | 5||1||control | ||

| + | |- | ||

| + | | 8||1||control | ||

| + | |- | ||

| + | | 8||1||control | ||

| + | |- | ||

| + | | 8||1||control | ||

| + | |- | ||

| + | | 8||1||control | ||

| + | |- | ||

| + | | 11||1||control | ||

| + | |- | ||

| + | | 11||1||control | ||

| + | |- | ||

| + | | 12||1||control | ||

| + | |- | ||

| + | | 12||1||control | ||

| + | |- | ||

| + | | 15||1||control | ||

| + | |- | ||

| + | | 17||1||control | ||

| + | |- | ||

| + | | 22||1||control | ||

| + | |- | ||

| + | | 23||1||control | ||

| + | |} | ||

| + | </center> | ||

| + | |||

| + | library(survival) | ||

| + | data <- read.table("<your_directory>/data.csv", sep=",", header = TRUE) | ||

| + | data | ||

| + | |||

| + | attach(data) | ||

| + | data.surv <- survfit(Surv(Time, Censor)~Group, conf.type="none") | ||

| + | summary(data.surv) | ||

| + | Call: survfit(formula = Surv(Time, Censor) ~ Group, conf.type = "none") | ||

| + | |||

| + | Group=6-MP | ||

| + | time n.risk n.event survival std.err | ||

| + | 6 21 3 0.857 0.0764 | ||

| + | 7 17 1 0.807 0.0869 | ||

| + | 10 15 1 0.753 0.0963 | ||

| + | 13 12 1 0.690 0.1068 | ||

| + | 16 11 1 0.627 0.1141 | ||

| + | 22 7 1 0.538 0.1282 | ||

| + | 23 6 1 0.448 0.1346 | ||

| + | |||

| + | Group=control | ||

| + | time n.risk n.event survival std.err | ||

| + | 1 21 2 0.9048 0.0641 | ||

| + | 2 19 2 0.8095 0.0857 | ||

| + | 3 17 1 0.7619 0.0929 | ||

| + | 4 16 2 0.6667 0.1029 | ||

| + | 5 14 2 0.5714 0.1080 | ||

| + | 8 12 4 0.3810 0.1060 | ||

| + | 11 8 2 0.2857 0.0986 | ||

| + | 12 6 2 0.1905 0.0857 | ||

| + | 15 4 1 0.1429 0.0764 | ||

| + | 17 3 1 0.0952 0.0641 | ||

| + | 22 2 1 0.0476 0.0465 | ||

| + | 23 1 1 0.0000 NaN | ||

| + | |||

| + | data.surv <- survfit(Surv(Time, Censor)~1, conf.type="none") | ||

| + | summary(data.surv) | ||

| + | |||

| + | Call: survfit(formula = Surv(Time, Censor) ~ 1, conf.type = "none") | ||

| + | |||

| + | time n.risk n.event survival std.err | ||

| + | 1 42 2 0.952 0.0329 | ||

| + | 2 40 2 0.905 0.0453 | ||

| + | 3 38 1 0.881 0.0500 | ||

| + | 4 37 2 0.833 0.0575 | ||

| + | 5 35 2 0.786 0.0633 | ||

| + | 6 33 3 0.714 0.0697 | ||

| + | 7 29 1 0.690 0.0715 | ||

| + | 8 28 4 0.591 0.0764 | ||

| + | 10 23 1 0.565 0.0773 | ||

| + | 11 21 2 0.512 0.0788 | ||

| + | 12 18 2 0.455 0.0796 | ||

| + | 13 16 1 0.426 0.0795 | ||

| + | 15 15 1 0.398 0.0791 | ||

| + | 16 14 1 0.369 0.0784 | ||

| + | 17 13 1 0.341 0.0774 | ||

| + | 22 9 2 0.265 0.0765 | ||

| + | 23 7 2 0.189 0.0710 | ||

| + | |||

| + | data.surv <- survfit(Surv(Time, Censor)~Group, conf.type="none") | ||

| + | plot(data.surv, xlab="Time", ylab="Survival Probability", lty=c(1,3)) | ||

| + | legend(25, 1.0, c("6-MP Group", "Controls") , lty=c(1,3) ) | ||

| + | |||

| + | <center>[[Image:SMHS_SurvivalAnalysis_Fig9.png|500px]]</center> | ||

| + | |||

| + | survdiff(Surv(Time, Censor) ~ Group, data=data, rho=0) | ||

| + | |||

| + | Call: | ||

| + | survdiff(formula = Surv(Time, Censor) ~ Group, data = data, rho = 0) | ||

| + | |||

| + | N Observed Expected (O-E)^2/E (O-E)^2/V | ||

| + | Group=6-MP 21 9 19.3 5.46 16.8 | ||

| + | Group=control 21 21 10.7 9.77 16.8 | ||

| + | |||

| + | Chisq= 16.8 on 1 degrees of freedom, p= 4.17e-05 | ||

| + | |||

| + | data.surv <- survfit(Surv(Time, Censor)~group, conf.type="log-log") | ||

| + | summary(data.surv) | ||

| + | |||

| + | Call: survfit(formula = Surv(Time, Censor) ~ 1, conf.type = "log-log") | ||

| + | |||

| + | time n.risk n.event survival std.err lower 95% CI upper 95% CI | ||

| + | 1 42 2 0.952 0.0329 0.8227 0.988 | ||

| + | 2 40 2 0.905 0.0453 0.7658 0.963 | ||

| + | 3 38 1 0.881 0.0500 0.7373 0.949 | ||

| + | 4 37 2 0.833 0.0575 0.6819 0.917 | ||

| + | 5 35 2 0.786 0.0633 0.6286 0.882 | ||

| + | 6 33 3 0.714 0.0697 0.5521 0.826 | ||

| + | 7 29 1 0.690 0.0715 0.5262 0.807 | ||

| + | 8 28 4 0.591 0.0764 0.4269 0.723 | ||

| + | 10 23 1 0.565 0.0773 0.4017 0.700 | ||

| + | 11 21 2 0.512 0.0788 0.3495 0.652 | ||

| + | 12 18 2 0.455 0.0796 0.2958 0.601 | ||

| + | 13 16 1 0.426 0.0795 0.2700 0.574 | ||

| + | 15 15 1 0.398 0.0791 0.2449 0.547 | ||

| + | 16 14 1 0.369 0.0784 0.2204 0.519 | ||

| + | 17 13 1 0.341 0.0774 0.1966 0.491 | ||

| + | 22 9 2 0.265 0.0765 0.1311 0.420 | ||

| + | 23 7 2 0.189 0.0710 0.0753 0.343 | ||

| + | |||

| + | |||

| + | * Suppose that time of death due to cancer is denoted as $T_{i1}$, is exponentially distributed with hazard $h_{i1}(t)=0.02$. In addition, time of death due to non-cancer is denoted by $T_{i2}$, has hazard function $h_{i2}(t)=0.03$. For subject $i$, it is assumed that $T_{i1}$ and $T_{i2}$ act independently. Note that $t$ is measured in years. Compute the probability that a subject survives at least 10 years. | ||

| + | ===References=== | ||

| − | + | * [http://en.wikipedia.org/wiki/Survival_analysis Survival Analysis Wikipedia] | |

| − | http:// | + | * [http://en.wikipedia.org/wiki/Hazard_ratio Hazard Ratio Wikipedia] |

| − | http:// | ||

| − | |||

<hr> | <hr> | ||

Latest revision as of 13:50, 18 November 2022

Contents

- 1 Scientific Methods for Health Sciences - Survival Analysis

- 1.1 Overview

- 1.2 Motivation

- 1.3 Theory

- 1.3.1 Survival function

- 1.3.2 Hazard Ratios

- 1.3.3 The LogRank and Mantel-Haenszel methods for computing hazard ratios

- 1.3.4 Survival analysis goals

- 1.3.5 Terminology

- 1.3.6 Survival distributions

- 1.3.7 Examples

- 1.3.8 Common density functions describing survival probability

- 1.3.9 Mathematical formulation/relations

- 1.3.10 Hazard function models

- 1.3.11 Example

- 1.4 Applications

- 1.5 Software

- 1.6 Problems

- 1.7 References

Scientific Methods for Health Sciences - Survival Analysis

Overview

Survival analysis is statistical methods for analyzing longitudinal data on the occurrence of events. Events may include death, injury, onset of illness, recovery from illness (binary variables) or transition above or below the clinical threshold of a meaningful continuous variable (e.g. CD4 counts). Typically, survival analysis accommodates data from randomized clinical trials or cohort study designs. In this section, we will present a general introduction to survival analysis including terminology and data structure, survival/hazard functions, parametric versus semi-parametric regression techniques and introduction to (non-parametric) Kaplan-Meier methods. Code examples are also included showing the applications survival analysis in practical studies.

Motivation

Studies in the field of public health often involve questions like “What is the proportion of a population which will survive past time t?” “What is the expected rate of death in study participants, or the population”? “How do particular circumstances increase or decrease the probability of survival?” To answer questions like this, we would need to define the term of lifetime and model the time to event data. In cases like this, death or failure is considered as an event in survival analysis of time duration to until one or more events happen.

Theory

Risk is the expected value of an undesirable outcome. To estimate the risk, we combine (weight-average) the probabilities of various possible events (outcomes) and some assessment of the corresponding harm into a single value, the risk. For a simple binary possibility of accident vs. no-accident situation, the Risk = (probability of the accident occurring) \(\times \) (expected loss in case of the accident). For instance, probability of accident for certain sport activity may be equal to \(0.001\), which would be associated with a loss of \(\$ 10,000\) healthcare costs, then total risk of playing the sport is a loss of \(0.001\times \$10,000=\$10\).

In general, when there are multiple types of negative outcomes (e.g., types of potential injuries), the total risk is the weighted-average of all different accidents (outcomes): $$Risk = \sum_{\text{For all accidents}} {(\text{probability of the accident occurring}) \times (\text{expected loss in case of the accident})}.$$

For example, in certain clinical decision making, treatment X may lead to an adverse event A with probability of \(0.01\), which is associated with a (monetary) loss of \(\$1,000\), and a different probability of \(0.000001\) of an adverse event of type B, associated with a loss of \(\$200,000\), then the total risk (loss expectancy) would be: $$Risk= (0.01\times \$1,000) + (0.000001\times \$200,000) = \$10.2.$$

Survival function

The survival function, is defined as \(S(t) = P(T > t), \) where \(t\) is time, \(T\) is a random variable denoting the time of event (e.g., death), and \(P\) is the probability function. Hence, the survival function is the probability that the time of event is bigger than some specified time \(t\). It is common to assume \(S(0) = 1\), as the possibility of immediate death is unlikely, most of the time. If \(u \ge t\), then knowing that \(T > u\) yields \(T > t\), e.g., the survival function is decreasing. Thus, \(S(t) \ge S(u)\) for all \(u \ge t\) -- for all subjects, survival to a later age is only possible if subjects have survived all previous younger ages. The survival function usually tends to zero as \(T\) (age) increases.

Hazard Ratios

Hazard is the slope of the survival function, which measures of how rapidly critical events occur (e.g., subjects are dying).

- The hazard ratio compares two treatments. If the hazard ratio is 2.0, then the rate of deaths in one treatment group is twice the rate in the other group.

- The hazard ratio is not computed at any one time point, but is computed from all the data in the survival curve.

- Since there is only one hazard ratio reported, it can only be interpreted if you assume that the population hazard ratio is consistent over time, and that any differences are due to random sampling. This is called the assumption of proportional hazards.

- If the hazard ratio is not consistent over time, the value reported for the hazard ratio may not be useful. If two survival curves cross, the hazard ratios are certainly not consistent (unless they cross at late time points, when there are few subjects still being followed so there is a lot of uncertainty in the true position of the survival curves).

- The hazard ratio is not directly related to the ratio of median survival times. A hazard ratio of 2.0 does not mean that the median survival time is doubled (or halved). A hazard ratio of 2.0 means a patient in one treatment group who has not died (or progressed, or whatever end point is tracked) at a certain time point has twice the probability of having died (or progressed...) by the next time point compared to a patient in the other treatment group.

- Hazard ratios, and their confidence intervals, may be computed using two methods (see below LogRank and Mantel-Haenszel), each reporting both the hazard ratio and its reciprocal. If people in group A die at twice the rate of people in group B (HR=2.0), then people in group B die at half the rate of people in group A (HR=0.5).

The LogRank and Mantel-Haenszel methods for computing hazard ratios

Both, the LogRank and Mantel-Haenszel methods usually give nearly identical results, unless in situations when several subjects die at the same time or when the hazard ratio is far from 1.0.

The Mantel-Haenszel method

The Mantel-Haenszel method reports hazard ratios that are further from 1.0 (so the reported hazard ratio is too large when the hazard ratio is greater than 1.0, and too small when the hazard ratio is less than 1.0):

- Compute the total variance, V;

- Compute \(K = \frac{O1 - E1}{V}\), where \(O1\) - is the total observed number of events in group1, \(E1\) is the total expected number of events in group1. You'd get the same value of \(K\) if you used the other group;

- The hazard ratio equals \(e^K\);

- The 95% confidence interval of the hazard ratio is: (\(e^{K - \frac{1.96}{\sqrt{V}}}$, $e^{K + \frac{1.96}{\sqrt{V}}}\)).

The LogRank method

The LogRank method is also referred to as O/E method and reports values that are closer to 1.0 than the true Hazard Ratio, especially when the hazard ratio is large or the sample size is large. When there are ties, both methods are less accurate. The logrank methods tend to report hazard ratios that are even closer to 1.0 (so the reported hazard ratio is too small when the hazard ratio is greater than 1.0, and too large when the hazard ratio is less than 1.0):

- As part of the Kaplan-Meier calculations, compute the number of observed events (deaths, usually) in each group ($O_a$ and $O_b$), and the number of expected events assuming a null hypothesis of no difference in survival (\(E_a\) and \(E_b\));

- The hazard ratio then is: \(HR= \frac{\frac{O_a}{E_a}}{\frac{O_b}{E_b}}\);

- The standard error of the natural logarithm of the hazard ratio is: \(\ln{HR}=\sqrt{\frac{1}{E_a} + \frac{1}{E_b}}\);

- The lower and upper limits of the 95% confidence interval of the hazard ratio are: \(e^{\big\{ \ln(O_a)-\ln(E_a) \pm 1.96 \sqrt{\frac{1}{E_a} + \frac{1}{E_b}}\big\}}\). Recall that \(HR=\frac{O_a E_b}{O_b E_a}\), and under the null hypothesis \(E_a=E_b\), so \(\ln(HR)= \ln(O_a)-\ln(E_a).\)

Survival analysis goals

- Estimate time-to-event for a group of individuals, such as time until second heart-attack for a group of Myocardial Infarction (MI) patients;

- To compare time-to-event between two or more groups, such as treated vs. placebo MI patients in a randomized controlled trial;

- To assess the relationship of co-variables to time-to-event, such as: does weight, insulin resistance, or cholesterol influence survival time of MI patients?

Terminology

- Time-to-event: The time from entry into a study until a subject has a particular outcome.

- Censoring: Subjects are said to be censored if they are lost to follow up or drop out of the study, or if the study ends before they die or have another outcome of interest. They are (censored) counted as alive or disease-free for the time they were enrolled in the study. If dropout is related to both outcome and treatment, dropouts may bias the results. At any given time, the variable censor indicates whether the subject continues with the study (censor=1), but typically, each $c_i$ is paired with a failure time $T_i$ indicating the patient died (event occurred). If a patient is lost to follow up, $c_i=0$, indicating the patient had no record at time $T_i$, i.e., a right-censored failure time.

- Two-variable outcome: time variable: $t_i$ = time of last disease-free observation or time at event; censoring variable: $c_i=1$ if had the event; $c_i =0$ no event by time $t_i$.

- Right Censoring ($T>t$): Common examples: termination of the study; death due to a cause that is not the event of interest; loss to follow-up. We know that subject survived at least to time $t$.

- Example: Suppose subject 1 is enrolled at day 55 and dies on day 76, subject 2 enrolls at day 87 and dies at day 102, subject 3 enrolls at day 75 but is lost (censored) by day 81, and subject 4 enrolls in day 99 and dies at day 111. The Figure below shows these data graphically. Note of varying start times. This figure is generated using the SOCR Y Interval Chart.

- The next Figure shows a plot of every subject's time since their baseline time collection (right censoring).

Survival distributions

- $T_i$, the event time for an individual, is a random variable having certain probability distribution;

- Different models for survival data are distinguished by different choice of distribution for the variable $T_i$;

- Parametric survival analysis is based on Waiting Time distributions (e.g., exponential probability distribution);

- Assume that times-to-event for individuals in a dataset follow a continuous probability distribution (for which we may have an analytic mathematical representation or not). For all possible times $T_i$ after baseline, there is a certain probability that an individual will have an event at exactly time $T_i$. For example, human beings have a certain probability of dying at ages 1, 26, 86, 100, denoted by $P(T=1)$, $P(T=26)$, $P(T=86)$, $P(T=100)$, respectively, and these probabilities are obviously vastly different from one another;

- Probability density function f(t): In the case of human longevity, $T_i$ is unlikely to follow a unimodal normal distribution, because the probability of death is not highest in the middle ages, but at the beginning and end of life (bimodal?). The probability of the failure time occurring at exactly time $t$ (out of the whole range of possible $t$’s) is: $f(t)=\lim_{∆t→0}\frac{P(t≤T<t+∆t)}{∆t}$.

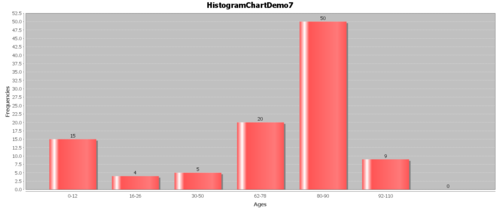

- Example: Suppose we have the following hypothetical data (Figure below). People have a high chance of dying in their 70’s and 80’s, but they have a smaller chance of dying in their 90’s and 100’s, because few people make it long enough to die at these ages.

| Ages | Frequencies | CDF(age) | S(age) |

|---|---|---|---|

| 0-12 | 15 | 0.145631068 | 0.854368932 |

| 16-26 | 4 | 0.184466019 | 0.815533981 |

| 30-50 | 5 | 0.233009709 | 0.766990291 |

| 62-78 | 20 | 0.427184466 | 0.572815534 |

| 80-90 | 50 | 0.912621359 | 0.087378641 |

| 92-110 | 9 | 1 | 0 |

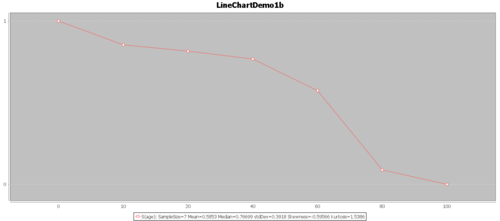

- The Survival function, $S(t)=1-F(t)$: The goal of survival analysis is to estimate and compare survival experiences of different groups. Survival experience is described by the cumulative survival function: $S(t)=1-P(T≤t)=1-F(t)$, where $F(t)$ is the CDF of $f(t)$. The Figure below shows the cumulative survival for the same hypothetical data, plotted as cumulative distribution rather than density.

Try to use real-data and the SOCR Survival Analysis and the SOCR Survival Java Applet to generate a similar plot.

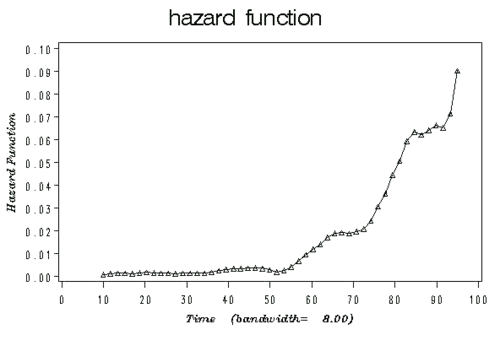

- Hazard function represents the probability that if you survive to $t$, you will succumb to the event in the next instant.

$$h(t)=\lim_{∆t→0} \frac{P(t≤T<t+∆t|T≥t)}{∆t}.$$

- The Hazard function may also be expressed in terms of density and survival functions: $h(t)=\frac{f(t)}{S(t)}$. This is because of the Bayesian rule:

$$h(t)dt = P(t≤T<t+dt|T≥t)=\frac{P(t≤T<t+dt \cap T≥t)}{P(T≥t)}=$$ $$\frac{P(t≤T<t+dt)}{P(T≥t)} =\frac{f(t)dt}{S(t)}.$$

- The Figure below illustrates an example plot of a hazard function depicting the hazard rate as an instantaneous incidence rate.

Examples

- For uncensored data, the following failure time are observed for n=10 subjects. For convenience, the failure times have been ordered. Calculate $\hat{S}(20)$.

| i | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |

|---|---|---|---|---|---|---|---|---|---|---|

| $T_i$ | 2 | 5 | 8 | 12 | 15 | 21 | 25 | 29 | 30 | 34 |

- $\hat{S}(t)=\frac{1}{n}\sum_{i=1}^n{I(T_i>t)}$, where the indicator (or characteristic) function $I(A)$ is defined by:

$$I(A)= \begin{cases} 1, & \text{A is true} \\ 0, & \text{if A is false} \end{cases}.$$ Thus, $\hat{S}(20)= P(T>20) = 1-P(T\leq 20) \equiv \frac{1}{10} (0+0+0+0+0+1+1+1+1+1)$. $$\hat{S}(20)=0.5.$$

- For censored data ($c_i$ represents the censoring indicator), suppose the observed data were:

| i | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |

|---|---|---|---|---|---|---|---|---|---|---|

| $T_i$ | 2 | 5 | 8 | 12 | 15 | 21 | 25 | 29 | 30 | 34 |

| $c_i$ | 1 | 0 | 1 | 0 | 1 | 0 | 1 | 0 | 1 | 0 |

$$\hat{h}(2)=\frac{P(2\leq T < 3|T\geq 2)}{1} =\frac{P(2\leq T < 3)}{P(T\geq 2)}=\frac{1/10}{1}=0.1,$$ $$\hat{h}(8)=\frac{P(8\leq T < 9|T\geq 8)}{1} =\frac{P(8\leq T < 9)}{P(T\geq 8)}=\frac{1/10}{8/10}= 0.125,$$ $$\hat{h}(15)=\frac{P(15\leq T < 16|T\geq 15)}{1} =\frac{P(15\leq T < 16)}{P(T\geq 15)}=\frac{1/10}{6/10}=0.166.$$

- Cumulative Hazard function is defined as $Λ(t)=\int_0^t{h(u)du}$. There is an important connection between cumulative hazard and survival: $S(t)=e^{-Λ(t)}$, although, the cumulative hazard function may be hard to interpret.

- Properties:

- (1) $Λ(t)≥0$;

- (2) $\lim_{t→∞}{Λ(t)}=\infty$;

- (3) $Λ(0)=0$.

- Hazard vs. density example: at birth, each person has a certain probability of dying at any age; that’s the probability density (cf. marginal probability). For example: a girl born today may have a 2% chance of dying at the age of 80 years. However, if a person survives for a while, the probabilities of prospective survival change (cf. conditional probability). For example, a woman who is 79 today may have a 6% chance of dying at the age of 80. The figure bellow gives a set of possible probability density, failure, survival and hazard function. $F(t)$=cumulative failure=$1-e^{-t^{1.7}}$; $f(t)$=density function=$1.7 t^{0.7} e^{-t^{1.7}}$; $S(t)$=cumulative survival=$e^{-t^{1.7}}$; $h(t)$=hazard function=$1.7t^{0.7}$.

# R plotting example op <- par(mfrow=c(2,2)) ## configure the 2x2 plotting canvas plot(x, 1.7*x^(0.7)* exp(-x*1.7), xlab = "x-values", ylab = "f(x)", type = "l", main="Probability Density") plot(x, 1- exp(-x*1.7), xlab = "x-values", ylab = "F(x)", type = "l", main="Cumulative Distribution") plot(x, exp(-x*1.7), xlab = "x-values", ylab = "S(x)", type = "l", main="Survival") plot(x, 1.7*x^(0.7), xlab = "x-values", ylab = "h(x)", type = "l", main="Hazard")

Common density functions describing survival probability

- Exponential (hazard is constant over time, simplest model!): constant hazard function $h(t)=h$; exponential density functions: $P(T=t)=f(t)=he^{-ht}$; survival function $P(T>t)=S(t)=\int_t^{\infty} {he^{-hu} du}=-e^{-hu} |_t^{\infty}=e^{-ht}$.

- Example: $h(t)=0.1$ cases/person-year; Then

- the probability of developing disease at year 10 is: $P(t=10)=0.01e^{-0.1*10}=0.01e^{-0.1}=0.009$;

- the probability of surviving past year 10 is: $S(t)=e^{-0.01t}=90.5%$;

- the cumulative risk through year 10 is 9.5% (complement of $P(T>10)$).

- Weibull (hazard function is increasing or decreasing over time)

Mathematical formulation/relations

- Hazard from density and survival: $h(t)=\frac{f(t)}{S(t)}$

- Survival from density: $S(t)=\int_t^{\infty} {f(u)du}$

- Density from survival: $f(t)=-\frac{dS(t)}{dt}=S(t)h(t)$

- Density from hazard: $f(t)=h(t) e^{-\int_0^t {h(u)du}}$

- Survival from hazard: $S(t)=e^{-\int_0^t {h(u)du}}$

- Hazard from survival: $h(t)=-\frac{d}{dt} \ln{S(t)}$

- Cumulative hazard from survival: $Λ(t)=-\log{S(t)}$

- Life expectancy (mean survival time): $E[T]=\int_0^{\infty} {tf(t)dt}=\int_0^{\infty} {S(t)}$, which is the area under the survival curve

- Restricted mean lifetime: $E[T∧L]=\int_0^L {S(t)dt}$

- Mean residual lifetime: $m(t_0)=E[T-t_0|T>t_0]=\int_{t_0}^{\infty}{\frac{(t-t_0)f(t)dt}{S(t_0)}}=\int_{t_0}^{\infty} {\frac{S(t)dt}{S(t_0)}}$, set $t_0=0$ to obtain E[T].

Hazard function models

- Parametric multivariate regression techniques: model the underlying hazard/survival function; assume that the dependent variable (time-to-event) takes on some known distribution, such as Weibull, exponential, or lognormal; estimates parameters of these distributions (e.g., baseline hazard function); estimates covariate-adjusted hazard ratios (a hazard ratio is a ratio of hazard rates); many times we care more about comparing groups than about estimating absolute survival. The model of parametric regression: components include a baseline hazard function (which may change over time) and a linear function of a set of k fixed covariates that when exponentiated gives the relative risk.

- Exponential model assumes fixed baseline hazard that we can estimate: with exponential distribution, $S(t)=λ e^{-λt}$, model applied: $\log{h_i(t)}=μ+β_1 x_{i1}+⋯+β_k x_{ik}$;

- Weibull model models the baseline hazard as a function of time: two parameters of shape ($\gamma$) and scale ($λ$) must be estimated to describe the underlying hazard function over time. With Weibull distribution: $S(t)=e^{-λt^{\gamma}}$, model applied: $\log {h_i(t)}= μ+α\log{t}+β_1 x_{i1}+⋯+β_k x_{ik}$;

- Cox regression (semi-parametric): Cox models the effect of predictors and covariates on the hazard rate but leaves the baseline hazard rate unspecified; also called proportional hazards regression; does not assume knowledge of absolute risk; estimates relative rather than absolute risk.

- Components: a baseline hazard function that is left unspecified but must be positive (equal to the hazard when all covariates are 0); a linear function of a set of $k$ fixed covariates that is exponentiated (equal to the relative risk).

- $\log{h_i(t)}= \log{h_0 (t)}+β_1 x_{i1}+⋯+β_k x_{ik}$; $h_i (t)=h_0(t) e^{β_1 x_{i1}+⋯+β_k x_{ik}}$.

- The point is to compare the hazard rates of individuals who have different covariates: hence, called Proportional hazards: $HR=\frac{h_1 (t)}{h_2 (t)}=\frac{h_0 (t) e^{βx_1 }}{h_0 (t) e^{βx_2}}=e^{β(x_1-x_2)}$, hazard functions should be strictly parallel.

- Kaplan-Meier Estimates (non-parametric estimate of the survival function): no a priori math assumptions (either about the underlying hazard function or about proportional hazards); simply, the empirical probability of surviving past certain times in the sample (taking into account censoring); non-parametric estimate of the survival function; commonly used to describe survivorship of study population/s; commonly used to compare two study populations; intuitive graphical presentation.

- Limit of Kaplan-Meier: this approach is mainly descriptive, doesn’t control for covariates, requires categorical predictors and can’t accommodate time-dependent variables.

- With Kaplan-Meier:

- For uncensored data: $\hat{S}(t)=\frac{1}{n}\sum_{i=1}^n{I(T_i>t)}$, where $I(A)=1$ if $A$ is true and 0 otherwise.

- For censored data, to estimate $\hat{S}(t)$ we use product rule from probability theory: $P(A\cap B)=P(A)*P(B)$ if $A$ and $B$ are independent. In survival analysis, intervals are defined by failures. e.g., $P(\text{surviving intervals 1 and 2})=$ $P(\text{surviving interval 1})\times P(\text{surviving interval 2 } | \text{ survived interval 1})$. For instance,

| i | 1 | 2 | 3 | 4 | 5 |

|---|---|---|---|---|---|

| $T_i$ | 4 | 6 | 7 | 12 | 12 |

| $c_i$ | 1 | 0 | 1 | 0 | 0 |

The second subject was lost to follow up, and cases 4 and 5 were alive after 12 months. The remaining 2 cases (1 and 3) died at times 4 and 7 months, respectively. To estimate the likelihood of a patient to survive 1 year (12 months) we break the 12 months into intervals anchored at points of dying (in this case 4 and 7 months): $$ S(12) = P(\text{surviving intervals [0,4] and (4,7] and and (7,12]})=$$ $$P(T>4)\times P(T>7|T>4)\times P(T>12|T>7)=$$ $P(\text{surviving interval [0,4]})\times$ $P(\text{surviving interval (4,7]}|\text{survived interval [0,4]})$ $\times P(\text{surviving interval (7,12]}|\text{survived interval [0,7]})$

- Note the single censored case at time 6 months! $P(\text{surviving intervals [0,4] and (4,7] and (7,12]})= 4/5 \times 2/3 \times 2/2= 0.53$, as 4 of the five cases (2, 3, 4,5) make it beyond time 4; 2 out of the 3 cases (2, 4 and 5) that survived the 4th month survived to the 7th month, and 2 out of the 2 cases (4 and 5) known to have survived beyond the 7th month lived past 12th month. Thus, $ S(12)=0.53$ which is between the probability of surviving 12 months = 40% (we end with 2 survivors at the end) and 60% (3/5) (only 2 are known to have died during the year).

Example

| i | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |

|---|---|---|---|---|---|---|---|---|---|---|

| $T_i$ | 2 | 5 | 8 | 12 | 15 | 21 | 25 | 29 | 30 | 34 |

| $c_i$ | 1 | 0 | 1 | 0 | 1 | 0 | 1 | 0 | 1 | 0 |

- To estimate $S(10)$: $S(10)=P(T>10)=P(T>10,T>8,T>5,T>2)=$ $P(T>10│T>8)P(T>8│T>5)P(T>5│T>2)P(T>2)=$ $7/7 * 7/8 * 9/9 * 9/10=0.7875$. So, $\hat{S}_{KM}(10)=0.7875$.

- To estimate $S(20)$: $S(20)=P(T>20)=P(T>20,T>15,T>10,T>8,T>5,T>2)=$ $P(T>20│T>15)P(T>15│T>10)P(T>10│T>8)P(T>8│T>5)P(T>5│T>2)P(T>2)=$ $6/6 * 5/7 * 7/7 * 7/8 * 9/9 * 9/10=0.5625$. So, $\hat{S}_{KM}(20)=0.5625$.

Applications

- The SOCR Survival Activity presents an example on survival analysis using the Kaplan-Meyer Method. This example is based on a dataset from “Modern applied statistics with S”, which contains two types of treatment groups denoted by 6-MP and control.

- This study investigated a non-response problem in survival analysis where the occurrence of missing data in the risk factor is related to mortality. The available data in the study suggest that the process that created the missing data depends jointly on survival and the unknown blood pressure, thereby distorting the relation of interest. Multiple imputations are used to impute missing blood pressure and then analyze the data under a variety of non-response models. One special modeling problem is treated in detail; the construction of a predictive model for drawing imputations if the number of variables is large. Risk estimates for these data appear robust to even large departures from the simplest non-response model, and are similar to those derived under deletion of the incomplete records.

Software

- SOCR Tools: Modeler, SUrvival Analysis Java Applet

- UCLA R-Survival R code example: Chapter 2, Chapter 4, Chapter 5 and Chapter 6

R-Code Example

R example using the KMsurv survival package.

hmohiv<-read.table("http://www.ats.ucla.edu/stat/r/examples/asa/hmohiv.csv", sep=",", header = TRUE)

library(survival)

attach(hmohiv)

mini<-hmohiv[ID<=5,]

mini

> mini ID time age drug censor entdate enddate 1 1 5 46 0 1 5/15/1990 10/14/1990 2 2 6 35 1 0 9/19/1989 3/20/1990 3 3 8 30 1 1 4/21/1991 12/20/1991 4 4 3 30 1 1 1/3/1991 4/4/1991 5 5 22 36 0 1 9/18/1989 7/19/1991

attach(mini) mini.surv <- survfit(Surv(time, censor)~ 1, conf.type="none") summary(mini.surv)

Call: survfit(formula = Surv(time, censor) ~ 1, conf.type = "none") time n.risk n.event survival std.err 3 5 1 0.8 0.179 5 4 1 0.6 0.219 8 2 1 0.3 0.239 22 1 1 0.0 NaN

plot(mini.surv, xlab="Time", ylab="Survival Probability") detach(mini) attach(hmohiv) hmohiv.surv <- survfit( Surv(time, censor)~ 1, conf.type="none") summary(hmohiv.surv) plot (hmohiv.surv, xlab="Time", ylab="Survival Probability" )

## Example using package KMsurv library(KMsurv) library(nlme) t6m<-floor(time/6) tall<-data.frame(t6m, censor) die<-gsummary(tall, sum, groups=t6m) total<-gsummary(tall, length, groups=t6m) rm(t6m) ltab.data<-cbind(die[,1:2], total[,2]) detach(hmohiv) attach(ltab.data) lt=length(t6m) t6m[lt+1]=NA nevent=censor nlost=total[,2] - censor mytable<-lifetab(t6m, 100, nlost, nevent) mytable[,1:5]

nsubs nlost nrisk nevent surv 0-1 100 10 95.0 41 1.00000000 1-2 49 3 47.5 21 0.56842105 2-3 25 2 24.0 6 0.31711911 3-4 17 1 16.5 1 0.23783934 4-5 15 1 14.5 0 0.22342483 5-6 14 0 14.0 5 0.22342483 6-7 9 0 9.0 1 0.14363025 7-8 8 0 8.0 1 0.12767133 8-9 7 0 7.0 1 0.11171242 9-10 6 1 5.5 3 0.09575350 10-NA 2 2 1.0 0 0.04352432

plot(t6m[1:3], mytable[,5], type="s", xlab="Survival time in every 6 month", ylab="Proportion Surviving") plot(t6m[1:11], mytable[,5], type="b", xlab="Survival time in every 6 month", ylab="Proportion Surviving")

Problems

- Time until death, $T$, has the following survival function $S(t)=e^{-λt}$. Show that the mean is greater than the median.

- Suppose that $T$ follows a Uniform distribution on (0,θ]. That is, the density can be written as $f(t)=\frac{1}{θ}$ for $t\in (0,θ]$ and 0 elsewhere. Determine whether or not the hazard function, $h(t)$, is a constant on the interval $t\in (0,θ]$.

- The following data are observed:

- Compute $\hat{S}_{KM}(20)$.

- Compute $\hat{Λ}(20)$.

- An estimator of the variance $V(\hat{Λ}(t))$ is given by $\int_0^t{\frac{1}{Y^2(s)} dN(s)}$, where $Y(s)=\sum_{i=1}^n{Y_i(s)}$ and $dN(s)=\sum_{i=1}^n{dN_i(s)}$. Compute $V(\hat{Λ}(t))$.

| i | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |

|---|---|---|---|---|---|---|---|---|---|---|

| $X_i$ | 2 | 4 | 8 | 11 | 15 | 21 | 25 | 29 | 30 | 34 |

| $∆_i$ | 0 | 1 | 0 | 1 | 0 | 1 | 0 | 1 | 0 | 0 |

- Use these data (from Example 1 of the SOCR Survival Analysis Java Applet) to replicate the results in R:

| Time | Censor | Group |

|---|---|---|

| 6 | 1 | 6-MP |

| 6 | 1 | 6-MP |

| 6 | 1 | 6-MP |

| 6 | 0 | 6-MP |

| 7 | 1 | 6-MP |

| 9 | 0 | 6-MP |

| 10 | 1 | 6-MP |

| 10 | 0 | 6-MP |

| 11 | 0 | 6-MP |

| 13 | 1 | 6-MP |

| 16 | 1 | 6-MP |

| 17 | 0 | 6-MP |

| 19 | 0 | 6-MP |

| 20 | 0 | 6-MP |

| 22 | 1 | 6-MP |

| 23 | 1 | 6-MP |

| 25 | 0 | 6-MP |

| 32 | 0 | 6-MP |

| 32 | 0 | 6-MP |

| 34 | 0 | 6-MP |

| 35 | 0 | 6-MP |

| 1 | 1 | control |

| 1 | 1 | control |

| 2 | 1 | control |

| 2 | 1 | control |

| 3 | 1 | control |

| 4 | 1 | control |

| 4 | 1 | control |

| 5 | 1 | control |

| 5 | 1 | control |

| 8 | 1 | control |

| 8 | 1 | control |

| 8 | 1 | control |

| 8 | 1 | control |

| 11 | 1 | control |

| 11 | 1 | control |

| 12 | 1 | control |

| 12 | 1 | control |

| 15 | 1 | control |

| 17 | 1 | control |

| 22 | 1 | control |

| 23 | 1 | control |

library(survival)

data <- read.table("<your_directory>/data.csv", sep=",", header = TRUE)

data

attach(data) data.surv <- survfit(Surv(Time, Censor)~Group, conf.type="none") summary(data.surv) Call: survfit(formula = Surv(Time, Censor) ~ Group, conf.type = "none")

Group=6-MP time n.risk n.event survival std.err 6 21 3 0.857 0.0764 7 17 1 0.807 0.0869 10 15 1 0.753 0.0963 13 12 1 0.690 0.1068 16 11 1 0.627 0.1141 22 7 1 0.538 0.1282 23 6 1 0.448 0.1346

Group=control time n.risk n.event survival std.err 1 21 2 0.9048 0.0641 2 19 2 0.8095 0.0857 3 17 1 0.7619 0.0929 4 16 2 0.6667 0.1029 5 14 2 0.5714 0.1080 8 12 4 0.3810 0.1060 11 8 2 0.2857 0.0986 12 6 2 0.1905 0.0857 15 4 1 0.1429 0.0764 17 3 1 0.0952 0.0641 22 2 1 0.0476 0.0465 23 1 1 0.0000 NaN

data.surv <- survfit(Surv(Time, Censor)~1, conf.type="none") summary(data.surv)

Call: survfit(formula = Surv(Time, Censor) ~ 1, conf.type = "none") time n.risk n.event survival std.err 1 42 2 0.952 0.0329 2 40 2 0.905 0.0453 3 38 1 0.881 0.0500 4 37 2 0.833 0.0575 5 35 2 0.786 0.0633 6 33 3 0.714 0.0697 7 29 1 0.690 0.0715 8 28 4 0.591 0.0764 10 23 1 0.565 0.0773 11 21 2 0.512 0.0788 12 18 2 0.455 0.0796 13 16 1 0.426 0.0795 15 15 1 0.398 0.0791 16 14 1 0.369 0.0784 17 13 1 0.341 0.0774 22 9 2 0.265 0.0765 23 7 2 0.189 0.0710

data.surv <- survfit(Surv(Time, Censor)~Group, conf.type="none")

plot(data.surv, xlab="Time", ylab="Survival Probability", lty=c(1,3))

legend(25, 1.0, c("6-MP Group", "Controls") , lty=c(1,3) )

survdiff(Surv(Time, Censor) ~ Group, data=data, rho=0)

Call: survdiff(formula = Surv(Time, Censor) ~ Group, data = data, rho = 0)

N Observed Expected (O-E)^2/E (O-E)^2/V Group=6-MP 21 9 19.3 5.46 16.8 Group=control 21 21 10.7 9.77 16.8

Chisq= 16.8 on 1 degrees of freedom, p= 4.17e-05

data.surv <- survfit(Surv(Time, Censor)~group, conf.type="log-log") summary(data.surv)

Call: survfit(formula = Surv(Time, Censor) ~ 1, conf.type = "log-log")

time n.risk n.event survival std.err lower 95% CI upper 95% CI 1 42 2 0.952 0.0329 0.8227 0.988 2 40 2 0.905 0.0453 0.7658 0.963 3 38 1 0.881 0.0500 0.7373 0.949 4 37 2 0.833 0.0575 0.6819 0.917 5 35 2 0.786 0.0633 0.6286 0.882 6 33 3 0.714 0.0697 0.5521 0.826 7 29 1 0.690 0.0715 0.5262 0.807 8 28 4 0.591 0.0764 0.4269 0.723 10 23 1 0.565 0.0773 0.4017 0.700 11 21 2 0.512 0.0788 0.3495 0.652 12 18 2 0.455 0.0796 0.2958 0.601 13 16 1 0.426 0.0795 0.2700 0.574 15 15 1 0.398 0.0791 0.2449 0.547 16 14 1 0.369 0.0784 0.2204 0.519 17 13 1 0.341 0.0774 0.1966 0.491 22 9 2 0.265 0.0765 0.1311 0.420 23 7 2 0.189 0.0710 0.0753 0.343

- Suppose that time of death due to cancer is denoted as $T_{i1}$, is exponentially distributed with hazard $h_{i1}(t)=0.02$. In addition, time of death due to non-cancer is denoted by $T_{i2}$, has hazard function $h_{i2}(t)=0.03$. For subject $i$, it is assumed that $T_{i1}$ and $T_{i2}$ act independently. Note that $t$ is measured in years. Compute the probability that a subject survives at least 10 years.

References

- SOCR Home page: http://www.socr.umich.edu

Translate this page: