Difference between revisions of "AP Statistics Curriculum 2007 GLM Corr"


Revision as of 00:14, 17 February 2008


General Advanced Placement (AP) Statistics Curriculum - Correlation

Many biomedical, social, engineering and science applications involve analyzing the relationships, if any, between two or more variables involved in the process of interest. We begin with the simplest situation, where bivariate data (X and Y) are measured for a process and we are interested in determining the association, the relationship, or an appropriate model for these observations (e.g., fitting a straight line to the (X,Y) pairs). If we succeed in determining a relationship between X and Y, we can use this model to make predictions, i.e., given a value of X, predict the corresponding Y response. Note that in this design the data consist of paired observations (X,Y), for example, the height and weight of individuals.

Lines in 2D

There are 3 types of lines in the 2D plane: vertical lines, horizontal lines and oblique lines. In general, a line in 2D is represented by an equation of the form \(aX + bY = c\). Provided the line is not vertical, this is most frequently expressed as \(Y = aX + b\), with slope a and intercept b (these constants generally differ from the a and b of the general form).
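Converting the general form to slope-intercept form is a one-line calculation: if \(b \neq 0\), solving \(aX + bY = c\) for Y gives \(Y = (-a/b)X + c/b\). The minimal sketch below assumes integer coefficients (so `Fraction` keeps the result exact); the function name `to_slope_intercept` and the letters `m` (slope) and `k` (intercept) are hypothetical, chosen to avoid reusing a and b.

```python
from fractions import Fraction

def to_slope_intercept(a, b, c):
    """Rewrite aX + bY = c as Y = m*X + k; returns (m, k).

    Assumes integer a, b, c. b == 0 means the line is vertical (or the
    equation is degenerate), so no slope-intercept form exists.
    """
    if b == 0:
        raise ValueError("b = 0: vertical line X = c/a has no slope-intercept form")
    m = Fraction(-a, b)   # slope
    k = Fraction(c, b)    # intercept
    return m, k

# 2X - Y = -1 is the same line as Y = 2X + 1
print(to_slope_intercept(2, -1, -1))
```

Exact fractions are used so that, for example, \(3X + 2Y = 1\) yields slope \(-3/2\) and intercept \(1/2\) without floating-point rounding.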

Recall that there is a one-to-one correspondence between lines in 2D and (linear) equations of the following forms:

- If the line is vertical (\(X_1 = X_2\)): \(X = X_1\);

- If the line is horizontal (\(Y_1 = Y_2\)): \(Y = Y_1\);

- Otherwise (oblique line): \({Y-Y_1 \over Y_2-Y_1} = {X-X_1 \over X_2-X_1}\), for \(X_1 \neq X_2\) and \(Y_1 \neq Y_2\),

where \((X_1,Y_1)\) and \((X_2,Y_2)\) are two points on the line of interest (two distinct points in 2D determine a unique line).
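The three cases above translate directly into a small decision procedure. This is a sketch assuming integer (or `Fraction`) coordinates; `line_through` and its tuple return format are hypothetical choices for illustration. In the oblique case it solves the two-point equation for Y, giving slope \((Y_2-Y_1)/(X_2-X_1)\) and intercept \(Y_1 - \text{slope}\cdot X_1\).

```python
from fractions import Fraction

def line_through(p1, p2):
    """Classify the unique line through two distinct points.

    Returns ("vertical", X1) for X = X1, ("horizontal", Y1) for Y = Y1,
    or ("oblique", slope, intercept) for Y = slope*X + intercept.
    """
    (x1, y1), (x2, y2) = p1, p2
    if (x1, y1) == (x2, y2):
        raise ValueError("two distinct points are needed to determine a line")
    if x1 == x2:
        return ("vertical", Fraction(x1))
    if y1 == y2:
        return ("horizontal", Fraction(y1))
    # Oblique case: solve (Y - Y1)/(Y2 - Y1) = (X - X1)/(X2 - X1) for Y.
    slope = Fraction(y2 - y1) / Fraction(x2 - x1)
    intercept = Fraction(y1) - slope * x1
    return ("oblique", slope, intercept)

print(line_through((0, 1), (1, 3)))   # two points on Y = 2X + 1
print(line_through((2, 0), (2, 5)))   # a vertical line, X = 2
```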

- Try drawing the following lines manually and using this applet:

  - \(Y = 2X + 1\)

  - \(Y = -3X - 5\)
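To draw either line by hand, it is enough to tabulate a few (X, Y) points and connect them. A minimal sketch (the helper name `points_on_line` is hypothetical) that evaluates \(Y = aX + b\) at X = -2, ..., 2:

```python
def points_on_line(slope, intercept, xs):
    """Return the (X, Y) pairs on Y = slope*X + intercept for each X in xs."""
    return [(x, slope * x + intercept) for x in xs]

xs = range(-2, 3)   # X = -2, -1, 0, 1, 2
print(points_on_line(2, 1, xs))     # Y = 2X + 1  -> [(-2, -3), (-1, -1), (0, 1), (1, 3), (2, 5)]
print(points_on_line(-3, -5, xs))   # Y = -3X - 5 -> [(-2, 1), (-1, -2), (0, -5), (1, -8), (2, -11)]
```

Any two of these points already determine each line; the extra points are a check on the drawing.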

The Correlation Coefficient

The correlation coefficient (\(-1 \leq \rho \leq 1\)) is a measure of linear association, i.e., of how closely bivariate data cluster around a straight line. The relationship between two variables (X, Y) can be summarized by their means and standard deviations, \((\mu_X, \sigma_X)\) and \((\mu_Y, \sigma_Y)\), together with the correlation coefficient, denoted by \(\rho=\rho_{(X,Y)}=R(X,Y)\).

- If \(\rho=1\), we have a perfect positive correlation (all points lie on a straight line with positive slope).

- If \(\rho=0\), there is no correlation (random cloud scatter), i.e., no linear relation between X and Y.

- If \(\rho = -1\), there is a perfect negative correlation (all points lie on a straight line with negative slope).

Computing \(\rho=R(X,Y)\)

The protocol for computing the correlation has three steps: standardizing each variable, multiplying the standardized values pairwise, and averaging the products.

- In general, for any two random variables X and Y:

\[\rho_{X,Y}={\mathrm{COV}(X,Y) \over \sigma_X \sigma_Y} ={E((X-\mu_X)(Y-\mu_Y)) \over \sigma_X\sigma_Y},\] where E is the expected value operator and COV denotes covariance. Since \(\mu_X = E(X)\), \(\sigma_X^2 = E(X^2) - E^2(X)\) (similarly for Y) and \(\mathrm{COV}(X,Y) = E(XY) - E(X)E(Y)\), we may also write

\[\rho_{X,Y}=\frac{E(XY)-E(X)E(Y)}{\sqrt{E(X^2)-E^2(X)}~\sqrt{E(Y^2)-E^2(Y)}}.\]
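Both population formulas can be evaluated exactly for a small discrete distribution. The joint probabilities below are hypothetical, chosen only so the arithmetic is easy to check by hand: \(E(X)=1/2\), \(E(Y)=3/4\), \(\sigma_X^2 = 1/4\), \(\sigma_Y^2 = 3/16\), \(\mathrm{COV}(X,Y) = 1/8\), hence \(\rho = 1/\sqrt{3} \approx 0.577\). The helper `E` is an illustrative name for the expected value operator.

```python
import math
from fractions import Fraction

# Hypothetical joint pmf P(X = x, Y = y), for illustration only.
pmf = {
    (0, 0): Fraction(1, 4),
    (0, 1): Fraction(1, 4),
    (1, 1): Fraction(1, 2),
}

def E(g):
    """Expected value of g(X, Y) under the joint pmf."""
    return sum(p * g(x, y) for (x, y), p in pmf.items())

mu_x, mu_y = E(lambda x, y: x), E(lambda x, y: y)

# Definition: COV(X, Y) / (sigma_X * sigma_Y)
cov = E(lambda x, y: (x - mu_x) * (y - mu_y))
var_x = E(lambda x, y: (x - mu_x) ** 2)
var_y = E(lambda x, y: (y - mu_y) ** 2)
rho_def = float(cov) / math.sqrt(var_x * var_y)

# Alternative form: (E(XY) - E(X)E(Y)) / (sqrt(E(X^2) - E^2(X)) * sqrt(E(Y^2) - E^2(Y)))
num = E(lambda x, y: x * y) - mu_x * mu_y
den = math.sqrt(E(lambda x, y: x * x) - mu_x ** 2) * math.sqrt(E(lambda x, y: y * y) - mu_y ** 2)
rho_alt = float(num) / den

print(rho_def, rho_alt)   # both equal 1/sqrt(3), up to floating-point rounding
```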

- Sample correlation: when we only have sampled data, we replace the (unknown) expectations and standard deviations by their sample analogues (the sample mean and sample standard deviation) to compute the sample correlation:

- Suppose \(\{X_1, X_2, X_3, \cdots, X_n\}\) and \(\{Y_1, Y_2, Y_3, \cdots, Y_n\}\) are paired (bivariate) observations of the same process, and \((\bar{x}, s_x)\) and \((\bar{y}, s_y)\) are the sample means and sample standard deviations of the X and Y measurements, respectively.


\[ r_{xy}=\frac{\sum (x_i-\bar{x})(y_i-\bar{y})}{(n-1) s_x s_y} = {1 \over n-1} \sum_{i=1}^n { {x_i-\bar{x} \over s_x} {y_i-\bar{y}\over s_y}}, \]

where \(\bar{x}\) and \(\bar{y}\) are the sample means of X and Y, \(s_x\) and \(s_y\) are the sample standard deviations of X and Y, and the sum runs from i = 1 to n. Expanding the numerator, we may rewrite this as

\[ r_{xy}=\frac{\sum x_iy_i-n \bar{x} \bar{y}}{(n-1) s_x s_y}=\frac{n\sum x_iy_i-\sum x_i\sum y_i} {\sqrt{n\sum x_i^2-(\sum x_i)^2}~\sqrt{n\sum y_i^2-(\sum y_i)^2}}. \]
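As a check on the algebra, the sketch below implements the standardized-product formula (standardize, multiply, average with divisor n - 1) and the computational formula separately and confirms they agree. The function names and the five data pairs are hypothetical; for these data \(r = 6/\sqrt{60} \approx 0.775\).

```python
import math
from statistics import mean, stdev

def r_standardized(x, y):
    """(1/(n-1)) * sum of products of z-scores, using sample SDs (divisor n-1)."""
    n = len(x)
    xbar, ybar = mean(x), mean(y)
    sx, sy = stdev(x), stdev(y)
    return sum(((xi - xbar) / sx) * ((yi - ybar) / sy) for xi, yi in zip(x, y)) / (n - 1)

def r_computational(x, y):
    """(n*sum(xy) - sum(x)*sum(y)) / (sqrt(n*sum(x^2) - sum(x)^2) * sqrt(n*sum(y^2) - sum(y)^2))."""
    n = len(x)
    sum_x, sum_y = sum(x), sum(y)
    sum_xy = sum(xi * yi for xi, yi in zip(x, y))
    sum_x2 = sum(xi * xi for xi in x)
    sum_y2 = sum(yi * yi for yi in y)
    num = n * sum_xy - sum_x * sum_y
    den = math.sqrt(n * sum_x2 - sum_x ** 2) * math.sqrt(n * sum_y2 - sum_y ** 2)
    return num / den

x = [1, 2, 3, 4, 5]   # hypothetical sample
y = [2, 4, 5, 4, 5]
print(r_standardized(x, y), r_computational(x, y))
```

The computational form needs only running sums, but with large values it can lose precision through cancellation in \(n\sum x_i^2 - (\sum x_i)^2\), which is why deviation-based formulas are usually preferred in software.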

- Note: The correlation is defined only if both standard deviations are finite and nonzero. It is a corollary of the Cauchy-Schwarz inequality that the correlation is always bounded: \(-1 \leq \rho \leq 1\).
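The bound follows in one step by applying the Cauchy-Schwarz inequality for expectations, \(|E(UV)| \le \sqrt{E(U^2)\,E(V^2)}\), to the centered variables \(U = X-\mu_X\) and \(V = Y-\mu_Y\):

```latex
|\mathrm{COV}(X,Y)| = |E(UV)| \le \sqrt{E(U^2)\,E(V^2)} = \sqrt{\sigma_X^2\,\sigma_Y^2} = \sigma_X\sigma_Y
\quad\Longrightarrow\quad
|\rho_{X,Y}| = \frac{|\mathrm{COV}(X,Y)|}{\sigma_X\sigma_Y} \le 1 .
```

The same argument applied to the sums \(\sum (x_i-\bar{x})(y_i-\bar{y})\) gives \(|r_{xy}| \le 1\) for the sample correlation.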

Examples

Human weight and height

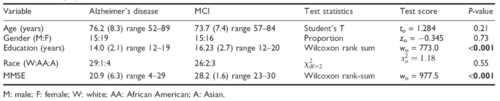

Suppose we took only 6 of the over 2,000 observations of human weight and height included in the SOCR Dataset.

| Subject Index | Height (\(x_i\)) | Weight (\(y_i\)) | \(x_i-\bar{x}\) | \(y_i-\bar{y}\) | \((x_i-\bar{x})^2\) | \((y_i-\bar{y})^2\) | \((x_i-\bar{x})(y_i-\bar{y})\) |
|---|---|---|---|---|---|---|---|
| 1 | 167 | 60 | 6 | 4.67 | 36 | 21.78 | 28.00 |
| 2 | 170 | 64 | 9 | 8.67 | 81 | 75.11 | 78.00 |
| 3 | 160 | 57 | -1 | 1.67 | 1 | 2.78 | -1.67 |
| 4 | 152 | 46 | -9 | -9.33 | 81 | 87.11 | 84.00 |
| 5 | 157 | 55 | -4 | -0.33 | 16 | 0.11 | 1.33 |
| 6 | 160 | 50 | -1 | -5.33 | 1 | 28.44 | 5.33 |
| Total | 966 | 332 | 0 | 0 | 216 | 215.33 | 195.00 |
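The table totals are all that is needed for the sample correlation: the \((n-1)\) factors in \(r_{xy} = \sum (x_i-\bar{x})(y_i-\bar{y}) / ((n-1) s_x s_y)\) cancel, leaving \(r = 195 / \sqrt{216 \times 215.33} \approx 0.904\), a strong positive linear association for these 6 subjects. A short sketch that rebuilds the deviation columns from the raw heights and weights (\(\bar{x} = 161\), \(\bar{y} \approx 55.33\)) and computes r:

```python
import math
from statistics import mean

heights = [167, 170, 160, 152, 157, 160]   # x_i from the table
weights = [60, 64, 57, 46, 55, 50]         # y_i from the table

xbar, ybar = mean(heights), mean(weights)
dx = [x - xbar for x in heights]           # x_i - xbar
dy = [y - ybar for y in weights]           # y_i - ybar

sxx = sum(d * d for d in dx)               # total of (x_i - xbar)^2
syy = sum(d * d for d in dy)               # total of (y_i - ybar)^2
sxy = sum(a * b for a, b in zip(dx, dy))   # total of cross-products

# (n - 1) cancels between numerator and s_x * s_y, so r = Sxy / sqrt(Sxx * Syy)
r = sxy / math.sqrt(sxx * syy)
print(f"Sxx={sxx}, Syy={syy:.2f}, Sxy={sxy:.2f}, r={r:.3f}")
```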

- TBD

References

- SOCR Home page: http://www.socr.ucla.edu
