Difference between revisions of "Scientific Methods for Health Sciences"


Revision as of 14:31, 29 July 2014

The Scientific Methods for Health Sciences EBook is still under active development. It is expected to be complete by Sept 01, 2014, when this banner will be removed.

Contents

- 1 SOCR Wiki: Scientific Methods for Health Sciences

- 2 Preface

- 3 Chapter I: Fundamentals

- 3.1 Exploratory Data Analysis, Plots and Charts

- 3.2 Overview

- 3.3 Motivation

- 3.4 Theory

- 3.5 Ubiquitous Variation

- 3.6 Overview

- 3.7 Motivation

- 3.8 Theory

- 3.9 Applications

- 3.10 Software

- 3.11 Problems

- 3.12 Parametric Inference

- 3.13 Probability Theory

- 3.14 Odds Ratio/Relative Risk

- 3.15 Probability Distributions

- 3.16 Resampling and Simulation

- 3.17 Design of Experiments

- 3.18 Intro to Epidemiology

- 3.19 Experiments vs. Observational Studies

- 3.20 Estimation

- 3.21 Hypothesis Testing

- 3.22 Statistical Power, Sensitivity and Specificity

- 3.23 Data Management

- 3.24 Bias and Precision

- 3.25 Association and Causality

- 3.26 Rate-of-change

- 3.27 Clinical vs. Statistical Significance

- 3.28 Correction for Multiple Testing

- 4 Chapter II: Applied Inference

- 4.1 Epidemiology

- 4.2 Correlation and Regression (ρ and slope inference, 1-2 samples)

- 4.3 ROC Curve

- 4.4 ANOVA

- 4.5 Non-parametric inference

- 4.6 Instrument Performance Evaluation: Cronbach's α

- 4.7 Measurement Reliability and Validity

- 4.8 Survival Analysis

- 4.9 Decision Theory

- 4.10 CLT/LLNs – limiting results and misconceptions

- 4.11 Association Tests

- 4.12 Bayesian Inference

- 4.13 PCA/ICA/Factor Analysis

- 4.14 Point/Interval Estimation (CI) – MoM, MLE

- 4.15 Study/Research Critiques

- 4.16 Common mistakes and misconceptions in using probability and statistics, identifying potential assumption violations, and avoiding them

- 5 Chapter III: Linear Modeling

- 5.1 Multiple Linear Regression (MLR)

- 5.2 Generalized Linear Modeling (GLM)

- 5.3 Analysis of Covariance (ANCOVA)

- 5.4 Multivariate Analysis of Variance (MANOVA)

- 5.5 Multivariate Analysis of Covariance (MANCOVA)

- 5.6 Repeated measures Analysis of Variance (rANOVA)

- 5.7 Partial Correlation

- 5.8 Time Series Analysis

- 5.9 Fixed, Randomized and Mixed Effect Models

- 5.10 Hierarchical Linear Models (HLM)

- 5.11 Multi-Model Inference

- 5.12 Mixture Modeling

- 5.13 Surveys

- 5.14 Longitudinal Data

- 5.15 Generalized Estimating Equations (GEE) Models

- 5.16 Model Fitting and Model Quality (KS-test)

- 6 Chapter IV: Special Topics

- 6.1 Scientific Visualization

- 6.2 PCOR/CER methods Heterogeneity of Treatment Effects

- 6.3 Big-Data/Big-Science

- 6.4 Missing data

- 6.5 Genotype-Environment-Phenotype associations

- 6.6 Medical imaging

- 6.7 Data Networks

- 6.8 Adaptive Clinical Trials

- 6.9 Databases/registries

- 6.10 Meta-analyses

- 6.11 Causality/Causal Inference, SEM

- 6.12 Classification methods

- 6.13 Time-series analysis

- 6.14 Scientific Validation

- 6.15 Geographic Information Systems (GIS)

- 6.16 Rasch measurement model/analysis

- 6.17 MCMC sampling for Bayesian inference

- 6.18 Network Analysis

SOCR Wiki: Scientific Methods for Health Sciences

Electronic book (EBook) on Scientific Methods for Health Sciences (coming up ...)

Preface

The Scientific Methods for Health Sciences (SMHS) EBook is designed to support a 4-course training in scientific methods for graduate students in the health sciences.

Format

Follow the instructions in this page to expand, revise or improve the materials in this EBook.

Learning and Instructional Usage

This section describes the means of traversing, searching, discovering and utilizing the SMHS EBook resources in both formal and informal learning settings.

Copyrights

The SMHS EBook is a freely and openly accessible electronic book developed by SOCR and the general community.

Chapter I: Fundamentals

Exploratory Data Analysis, Plots and Charts

Review of data types, exploratory data analyses and graphical representation of information.

Overview

- What is data? Data is a collection of facts, observations or information, such as values or measurements. Data can be numbers, measurements, or even just descriptions of things (meta-data). Data types fall into two broad categories: quantitative (numerical information) and qualitative (descriptive information).

- Quantitative data is anything that can be expressed as a number, or quantified. For example, the scores on a math test or the weights of girls in the fourth grade are both quantitative data. Quantitative data is often referred to as measurable data; it allows scientists to perform arithmetic operations, such as addition, multiplication and functional evaluation, or to estimate parameters of a population. There are two major types of quantitative data: discrete and continuous.

- Discrete data results from a finite, or infinite but countable, set of possible values; the values of this data type form a sequence of isolated (separated) points on the real number line.

- Continuous quantitative data results from an infinite and dense set of possible values that the observations can take on.

- Qualitative data cannot be expressed as a number; examples include gender and religious preference. Categorical (qualitative) data results from placing individuals into groups or categories. Nominal and ordinal categorical data types both fall into this category.

In statistics, exploratory data analysis (EDA) is an approach to analyzing data sets in order to summarize their main characteristics. Modern statistics regards the graphical visualization and interrogation of data as a critical component of any reliable method for statistical modeling, analysis and interpretation of data. Formally, there are two types of data analysis techniques that should be employed in concert on the same set of data to make a valid and robust inference: graphical techniques and quantitative techniques. We will discuss many of these later, but below is a snapshot of EDA approaches:

- Box plot, Histogram; Multi-vari chart; Run chart; Pareto chart; Scatter plot; Stem-and-leaf plot;

- Parallel coordinates; Odds ratio; Multidimensional scaling; Targeted projection pursuit; Principal component analysis; Multi-linear PCA; Projection methods such as grand tour, guided tour and manual tour.

- Median polish, Trimean.

Motivation

A feel for the data comes most clearly from applying various graphical techniques, which serve as a window onto human perception and intuition. The primary goal of EDA is to maximize the analyst’s insight into a data set and its underlying structure. To get a feel for the data, it is not enough for the analyst to know what is in the data; he or she must also know what is not in the data. The only way to do that is to draw on our own pattern-recognition and comparative abilities in the context of a series of judicious graphical techniques applied to the data. The main objectives of EDA are to:

- Suggest hypotheses about the causes of observed phenomena;

- Assess (parametric) assumptions on which statistical inference will be based;

- Support the selection of appropriate statistical tools and techniques;

- Provide a basis for further data collection through surveys and experiments.

Theory

Many EDA techniques have been proposed, validated and adopted for various statistical methodologies. Here is an introduction to some of the frequently used EDA charts and the quantitative techniques.

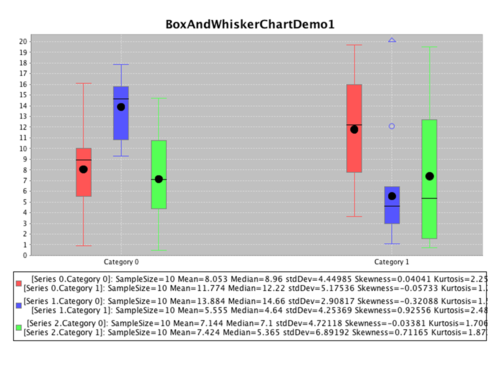

- Box-and-Whisker plot: It is an efficient way of presenting data, especially for comparing multiple groups of data. In the box plot, we can mark off the five-number summary of a data set (minimum, 25th percentile, median, 75th percentile, maximum). The box contains the middle 50% of the data. The upper edge of the box represents the 75th percentile, while the lower edge is the 25th percentile. The median is represented by a line drawn inside the box. If the median is not in the middle of the box, the data are likely skewed. The ends of the lines (whiskers) represent the minimum and maximum values of the data set, unless there are outliers, in which case the whiskers end at the most extreme non-outlying values. Outliers are observations below \( Q_1-1.5(IQR) \) or above \( Q_3+1.5(IQR) \), where \( Q_1 \) is the 25th percentile, \( Q_3 \) is the 75th percentile, and \( IQR=Q_3-Q_1 \) (the interquartile range). The advantages of a box plot are that it shows graphically the location and the spread of the data set, gives an idea of its skewness, and allows a comparison between variables through side-by-side box plots.

- Histogram: It is a graphical visualization of tabulated frequencies or counts of data within an equally spaced partition of the range of the data. It shows what proportion of the measurements falls into each of the categories (bins) defined by the partition of the data range.

Comment: Comparing the two series in the histogram above, we can easily tell that the pattern of series 2 is more obvious than that of series 1. Series 1 has more extreme values across the five days: for example, the values for Jan 1st and Jan 3rd are very high (almost 55 for Jan 1st), while the value for Jan 4th is almost -12. The values for series 2, however, are all above 0 and fluctuate between 5 and 20.

Comment: The dot chart above gives a clear picture of the values of all the data points and makes the basic summary measures easy to read. Most of the values fluctuate between 1 and 7, with mean 3.9 and median 4. There are two obvious outliers, with values -2 and 10.
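The five-number summary and the 1.5(IQR) outlier fences described for the box-and-whisker plot can be sketched in Python as follows. This is a minimal illustration, not part of the SOCR tools: the function name `box_plot_summary` and the sample values are hypothetical, and the "inclusive" quartile convention of the standard `statistics` module is an assumption (other conventions give slightly different \( Q_1 \) and \( Q_3 \)).

```python
import statistics


def box_plot_summary(values):
    """Return quartiles, outlier fences, whisker ends and outliers."""
    data = sorted(values)
    # Assumption: the "inclusive" quartile convention; other conventions
    # give slightly different Q1 and Q3 for small samples.
    q1, median, q3 = statistics.quantiles(data, n=4, method="inclusive")
    iqr = q3 - q1
    lower_fence = q1 - 1.5 * iqr
    upper_fence = q3 + 1.5 * iqr
    inside = [x for x in data if lower_fence <= x <= upper_fence]
    outliers = [x for x in data if x < lower_fence or x > upper_fence]
    return {
        "q1": q1,
        "median": median,
        "q3": q3,
        "iqr": iqr,
        "fences": (lower_fence, upper_fence),
        # Whiskers stop at the most extreme observations inside the fences.
        "whiskers": (inside[0], inside[-1]),
        "outliers": outliers,
    }


# Hypothetical sample with one unusually large value.
sample = [1, 2, 3, 4, 5, 6, 7, 8, 9, 30]
summary = box_plot_summary(sample)
for name, value in summary.items():
    print(f"{name}: {value}")
```

With this sample, the value 30 lies above the upper fence, so the upper whisker stops at 9 and 30 would be drawn as an individual outlier point.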

- Scatter plot: It uses Cartesian coordinates to display the values of two variables for a set of data, which is shown as a collection of points. The value of each variable determines the position of a point along the horizontal or vertical axis.

Comment: The x and y axes display the values of two variables, and each point drawn in the chart represents a pair of values, one for each variable.

For the first series, all the data points lie on or above the diagonal line, so as x increases, the paired y value increases at least as fast as x. We can infer a positive linear association between X and Y.

For the second series, most of the data points lie along the line, except for two outliers at (4,8) and (1,5). For most data points, as x increases, the paired y value decreases at a rate slower than or equal to that of x. We may infer a negative linear association between X and Y.

For the third series, we cannot describe the association between X and Y with a line; a quadratic pattern would fit better here.

- QQ Plot (Quantile-Quantile Plot): The observed values are plotted against theoretical quantiles. A line of good fit is drawn to show the behavior of the data values against the theoretical distribution. If \(F\) is a cumulative distribution function, then a quantile \(q\), also known as a percentile when \(p\) is expressed as a percentage, is defined as a solution to the equation \(F(q)=p\), that is, \(q=F^{-1}(p)\).

Comment: From the chart above, we can see that the data roughly follow a normal distribution, since most of the data points (shown in red) lie alongside the line. However, the fit is not tight, because some data points lie fairly far from the line. We may also suspect that the sampled data are not fully representative of the population because of the limited sample size.
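The pairing of observed values with theoretical quantiles \(q=F^{-1}(p)\) behind a normal QQ plot can be sketched as below. This is an illustrative sketch only: the function name `normal_qq_points`, the sample values and the plotting positions \(p_i=(i-0.5)/n\) are assumptions (several plotting-position conventions are in use), and the reference distribution is a normal fitted with the sample mean and standard deviation.

```python
from statistics import NormalDist, mean, stdev


def normal_qq_points(values):
    """Pair each sorted observation with a theoretical normal quantile."""
    data = sorted(values)
    n = len(data)
    # Reference distribution F: normal with the sample mean and SD.
    fitted = NormalDist(mean(data), stdev(data))
    points = []
    for i, observed in enumerate(data, start=1):
        p = (i - 0.5) / n                # assumed plotting position
        theoretical = fitted.inv_cdf(p)  # q = F^{-1}(p)
        points.append((theoretical, observed))
    return points


# Hypothetical measurements.
sample = [4.1, 5.0, 5.2, 5.9, 6.3, 6.8, 7.4, 9.5]
qq = normal_qq_points(sample)
for theoretical, observed in qq:
    print(f"theoretical {theoretical:6.2f}   observed {observed:6.2f}")
```

Plotting the second coordinate against the first gives the QQ chart; points close to the line y = x suggest that the normal model is reasonable for the sample.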

Median polish: An EDA procedure proposed by John Tukey. It fits an additive model to data in a two-way layout table, of the form row effect + column effect + overall (grand) effect. It is an iterative algorithm that removes trends by computing medians along the rows and columns of the table (more generally, along the coordinates of a spatial domain D). The median polish algorithm assumes: m(s) = grand effect + row(s) + column(s).

- Steps in Algorithm:

- Take the median of each row and record the value to the side of the row. Subtract the row median from each point in that particular row.

- Compute the median of the row medians, and record the value as the grand effect. Subtract this grand effect from each of the row medians.

- Take the median of each column and record the value beneath the column. Subtract the column median from each point in that particular column.

- Compute the median of the column medians, and add the value to the current grand effect. Subtract the value just added to the grand effect from each of the column medians.

- Repeat steps 1-4 until the row and column medians no longer change.
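The steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not a reference implementation: the function name `median_polish`, the iteration cap `max_iter`, the tolerance `tol` and the example table are all hypothetical, and the code accumulates row and column effects across sweeps so that every cell always equals grand effect + row effect + column effect + residual.

```python
from statistics import median


def median_polish(table, max_iter=10, tol=1e-9):
    """Decompose a two-way table into grand, row, column and residual parts."""
    residuals = [[float(x) for x in row] for row in table]
    n_rows, n_cols = len(residuals), len(residuals[0])
    grand = 0.0
    row_eff = [0.0] * n_rows
    col_eff = [0.0] * n_cols

    for _ in range(max_iter):
        # Step 1: remove each row's median from that row.
        row_med = [median(row) for row in residuals]
        for i in range(n_rows):
            row_eff[i] += row_med[i]
            residuals[i] = [x - row_med[i] for x in residuals[i]]

        # Step 2: move the median of the row effects into the grand effect.
        shift = median(row_eff)
        grand += shift
        row_eff = [r - shift for r in row_eff]

        # Step 3: remove each column's median from that column.
        col_med = [median(residuals[i][j] for i in range(n_rows))
                   for j in range(n_cols)]
        for j in range(n_cols):
            col_eff[j] += col_med[j]
            for i in range(n_rows):
                residuals[i][j] -= col_med[j]

        # Step 4: move the median of the column effects into the grand effect.
        shift = median(col_eff)
        grand += shift
        col_eff = [c - shift for c in col_eff]

        # Step 5: stop once a full sweep changes nothing.
        if all(abs(m) <= tol for m in row_med + col_med):
            break

    return grand, row_eff, col_eff, residuals


# Hypothetical 3x3 table: additive structure plus one unusual cell (25).
table = [[7, 9, 25],
         [8, 10, 12],
         [9, 11, 13]]
grand, row_eff, col_eff, residuals = median_polish(table)
print("grand effect:", grand)
print("row effects:", row_eff)
print("column effects:", col_eff)
print("residuals:", residuals)
```

Because medians are used instead of means, the unusual cell does not distort the fitted effects; it is left in the residual table, where it is easy to spot.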

- Trimean: It is a measure of a probability distribution’s location defined as a weighted average of the distribution’s median and its two quartiles, \( TM=(Q_1+2Q_2+Q_3)/4 \), where \( Q_2 \) is the median. It combines the median’s emphasis on center values with the midhinge’s attention to the extremes. It is a remarkably efficient estimator of the population mean, especially for large data sets (say, more than 100 points) from a symmetric population.
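As a small worked sketch, the trimean can be computed as the weighted average \( (Q_1+2Q_2+Q_3)/4 \), where \( Q_2 \) is the median. The function name `trimean` and the sample are hypothetical, and the "inclusive" quartile convention of Python's `statistics` module is an assumption.

```python
import statistics


def trimean(values):
    """Weighted average of the quartiles: (Q1 + 2*median + Q3) / 4."""
    # Assumption: "inclusive" quartiles; other conventions differ slightly.
    q1, q2, q3 = statistics.quantiles(sorted(values), n=4, method="inclusive")
    return (q1 + 2 * q2 + q3) / 4


# Hypothetical sample with one extreme value (50).
sample = [2, 4, 4, 5, 6, 7, 8, 9, 50]
print("trimean:", trimean(sample))
print("mean:", round(statistics.mean(sample), 2))
```

In this sample the single extreme value pulls the mean well above the bulk of the data, while the trimean stays near the center.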

4) Applications

4.1) This article gives a thorough introduction to EDA. It covers the basic concepts, objectives and techniques of EDA, and includes case studies of EDA applications. The case studies cover eight kinds of charts for univariate data, as well as reliability and multi-factor studies. The article gives specific examples with background, output and interpretation of results, and is a great source for studying EDA and charts.

4.2) This article starts with a general introduction to data analysis and works through EDA using examples of different graphical analyses. It serves as a more basic and general introduction to the concept and is a good starting point for studying EDA and charts.

4.3) The SOCR Motion Charts Project enables complex data visualization; see the [http://socr.umich.edu/HTML5/MotionChart/ SOCR MotionChart webapp]. The SOCR Motion Charts provide an interactive infrastructure for discovery-based exploratory analysis of multivariate data.

Now, we want to explore the relation between two variables in the dataset: UR (Unemployment Rate) and HPI (Housing Price Index) in the state of Alabama from 2000 to 2006. First, how does the UR in Alabama behave from 2000 to 2006?

From this chart, we can see that the UR of Alabama increases from 2000 to 2003 and then decreases sharply from 2004 to 2006. What, then, is the UR for states in other parts of the country over the same period?

They all seem to follow similar patterns over the years. Next, we study whether there is any pattern between UR and HPI in a single state, say Alabama, over the years.

The chart above shows that HPI increases over the years for Alabama, while UR first increases and then drops sharply between 2004 and 2006. If there is any association between UR and HPI, it appears to be quadratic rather than linear. Similarly, if we extend the graph to all three states from different regions, we have the following chart:

So, the question could be: is there any association between UR and HPI among all states based on the chart?

The motion chart, however, makes the study much more interesting by showing an animated chart of UR vs. HPI for 51 states from different areas from 2000 to 2006, so we can see how the values change over the years across all states. You are welcome to explore the data and see how the chart changes using the link listed above.

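5) Software

- SOCR Chart Demo: http://www.socr.ucla.edu/htmls/SOCR_Charts.html

- R-Bloggers: http://www.r-bloggers.com/exploratory-data-analysis-useful-r-functions-for-exploring-a-data-frame/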

6) Problems

6.1) Work on problems in Uniform Random Numbers and Random Walk from the Case Studies part in http://www.itl.nist.gov/div898/handbook/eda/section4/eda42.htm .

Two random samples were taken to determine the difference in backpack load, in pounds, between seniors and freshmen. The summaries are as follows:

| Year | Mean | SD | Median | Min | Max | Range | Count |
|---|---|---|---|---|---|---|---|
| Freshmen | 20.43 | 4.21 | 17.2 | 5.78 | 31.68 | 25.9 | 115 |
| Senior | 18.67 | 4.21 | 18.67 | 5.31 | 27.66 | 22.35 | 157 |

6.2) Which of the following plots would be the most useful in comparing the two sets of backpack weights? Choose One Answer:

(a) Histograms

(b) Dot Plots

(c) Scatter Plots

(d) Box Plots

6.3) School administrators are interested in examining the relationship between height and GPA. What type of plot should they use to display this relationship? Choose one answer.

(a) box plot

(b) scatter plot

(c) line plot

(d) dot plot

6.4) What would be the most appropriate plot for comparing the heights of the 8th graders from different ethnic backgrounds? Choose one answer.

(a) bar charts

(b) side by side boxplot

(c) histograms

(d) pie charts

6.5) There is a company in which a very small minority of males (3%) receive three times the median salary of males, and a very small minority of females (3%) receive one-third of the median salary of females. What do you expect the side-by-side boxplot of male and female salaries to look like? Choose one answer.

(a) Both boxplots will be skewed and the median line will not be in the middle of any of the boxes.

(b) Both boxplots will be skewed, in the case of the females the median line will be close to the top of the box and in the case of the males the median line will be closer to the bottom of the box.

(c) Need to have the actual data to compare the shape of the boxplots.

(d) Both boxplots will be skewed, in the case of males the median line will be close to the top of the box and for the females the median line will be closer to the bottom of the box.

6.6) A researcher has collected the following information on a random sample of 200 adults in the 40-50 age range: weight in pounds; heart beats per minute; smoker or non-smoker; single or married.

He wants to examine the relationship between: 1) heart beats per minute and weight, and 2) smoking and marital status. Choose one answer.

(a) He should draw a scatter plot of heart beat and weight, and a segmented bar chart of smoking and marital status.

(b) He should draw a side by side boxplot of heart beat and weight and a scatterplot of smoking and marital status.

(c) He should draw a side by side boxplot of smoking and marital status and a segmented bar chart of heart beat and weight.

(d) He should draw a back to back stem and leaf plot of weight and heart beat and examine the cell frequencies in the contingency table for smoking by marital status.

6.7) As part of an experiment in perception, 160 UCLA psych students completed a task on identifying similar objects. On average, the students spent 8.25 minutes, with a standard deviation of 2.4 minutes. However, the minimum time was 2.3 minutes and one student worked for almost 60 minutes. What is the best description of the histogram of times that students spent on this task? Choose one answer.

(a) The histogram of times could be symmetrical and not normal with major outliers.

(b) The histogram of times could be left skewed, and in case there are any outliers, it is likely that they will be smaller than the mean.

(c) The histogram of times could be right skewed, and in the case of any outliers, it is likely that they will be larger than the mean.

(d) The histogram of times could be normal with no major outliers.

7) References

- http://www.itl.nist.gov/div898/handbook/eda/eda.htm

- http://mirlyn.lib.umich.edu/Record/000252958

- http://mirlyn.lib.umich.edu/Record/012841334

- SOCR Home page: http://www.socr.umich.edu


Ubiquitous Variation

There are many ways to quantify variability, which is present in all natural processes.

Overview

In the real world, variation exists in almost every data set. No matter how controlled the environment is in the protocol or the design, virtually any repeated measurement, observation, experiment, trial, or study is bound to generate data that vary because of intrinsic (internal to the system) or extrinsic (ambient environment) effects. The extent to which the observations differ from one another is referred to as variation. Variation is an important concept in statistics, and measuring variability is of special importance in statistical inference. A measure of variation provides information on the extent to which data are dispersed or spread out. We will introduce several basic measures of variation commonly used in statistics (range, variance, standard deviation and sum of squares), together with Chebyshev’s theorem and the empirical rule.

Motivation

Variation is of significant importance in statistics and it is ubiquitous in data. Consider, for example, UCLA’s study of Alzheimer’s disease, which analyzed data from 31 Mild Cognitive Impairment (MCI) and 34 probable Alzheimer’s disease (AD) patients. The investigators made every attempt to control as many variables as possible. Yet, the demographic information they collected on the subjects contained unavoidable variation. The same study found variation in the MMSE cognitive scores even within the same subject. The table below shows the demographic characteristics of the subjects and patients included in this study, where the following notation is used: M (male), F (female), W (white), AA (African American), A (Asian).

| Variable | Alzheimer's disease | MCI | Test Statistic | Test Score | P-value |
|---|---|---|---|---|---|
| Age (years) | 76.2 (8.3), range 52-89 | 73.7 (7.3), range 57-84 | Student’s T | $t_0=1.284$ | p=0.21 |
| Gender (M:F) | 15:19 | 15:16 | Proportion | $z_0=-0.345$ | p=0.733 |
| Education (years) | 14.0 (2.1), range 12-19 | 16.23 (2.7), range 12-20 | Wilcoxon rank sum | $w_0=773.0$ | p<0.001 |
| Race (W:AA:A) | 29:1:4 | 26:2:3 | $\chi^2$ (df=2) | $\chi^2_{(df=2)}=1.18$ | p=0.55 |
| MMSE | 20.9 (6.3), range 4-29 | 28.2 (1.6), range 23-30 | Wilcoxon rank sum | $w_0=977.5$ | p<0.001 |

Once we accept that all natural phenomena are inherently variable and that no process is completely deterministic, we need measures of variation that tell us the extent to which the data are dispersed. Suppose, for instance, we flip a coin 50 times and get 15 heads and 35 tails. According to basic probability theory, if the coin is fair we would expect about 25 heads and 25 tails. So, what happened here? Now, suppose there are 100 students and each one flips the coin 50 times. What would you imagine the results to be?
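One way to build intuition for the last question is a small simulation. The sketch below is only an illustration under stated assumptions: the coin is taken to be fair, the function name `simulate_heads` and the seed value are hypothetical, and the pseudo-random generator stands in for real coin flips.

```python
import random
from collections import Counter


def simulate_heads(n_students=100, n_flips=50, seed=2014):
    """Number of heads obtained by each student flipping a fair coin."""
    rng = random.Random(seed)  # fixed seed so that the run is reproducible
    return [sum(rng.random() < 0.5 for _ in range(n_flips))
            for _ in range(n_students)]


heads = simulate_heads()
counts = Counter(heads)
# Text histogram: one star per student who obtained that many heads.
for k in sorted(counts):
    print(f"{k:2d} heads: {'*' * counts[k]}")
print("smallest:", min(heads), " largest:", max(heads))
```

The head counts typically cluster around 25 but differ from student to student, which is exactly the kind of variation the measures in the next section are meant to quantify.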

Theory

Measures of variation:

- 3.1) Range: The range is the simplest measure of variation; it is the difference between the largest value and the smallest. Range = Maximum – Minimum.

Suppose the pulse rate of Jack varied from 70 to 76 while that of Tom varied from 58 to 79. Jack has a range of 76 – 70 = 6 and Tom has a range of 79 – 58 = 21. Hence, by the range measure, we conclude that Tom's pulse rate varies more than Jack's.

A similar measure of variation covers (more or less) the middle 50 percent of the data. It is the interquartile range: $Q_3-Q_1$, where $Q_1$ and $Q_3$ are the first and third quartiles.

- 3.2) Variance: Unlike the range, which only involves the largest and smallest values, the variance involves all the data values.

- Population variance: $\sigma^2=\frac{\sum (x-\mu)^2}{N}$, where $\mu$ is the population mean of the data and $N$ is the size of the population.

- Unbiased estimate of the population variance: $s^2=\frac{\sum (x-\bar{x})^2}{n-1}$, where $\bar{x}$ is the sample mean and $n$ is the sample size.

- 3.3) Standard deviation: It is the square root of the variance. Because the deviations in the variance are squared, the variance is expressed in squared units; taking the square root brings the units back to those of the original data values.

- Population standard deviation: $\sigma=\sqrt{\frac{\sum (x-\mu)^2}{N}}$, where $\mu$ is the population mean of the data and $N$ is the size of the population.

- Sample standard deviation (the square root of the unbiased variance estimate): $s=\sqrt{\frac{\sum (x-\bar{x})^2}{n-1}}$, where $\bar{x}$ is the sample mean and $n$ is the sample size.

Consider an example: a biologist found 8, 11, 7, 13, 10, 11, 7 and 9 contaminated mice in 8 groups. Calculate $s$. $\bar{x}=(8+11+7+13+10+11+7+9)/8=9.5$

| $x$ | 8 | 11 | 7 | 13 | 10 | 11 | 7 | 9 | Total |
|---|---|---|---|---|---|---|---|---|---|
| $x-\bar{x}$ | -1.5 | 1.5 | -2.5 | 3.5 | 0.5 | 1.5 | -2.5 | -0.5 | 0 |
| $(x-\bar{x})^2$ | 2.25 | 2.25 | 6.25 | 12.25 | 0.25 | 2.25 | 6.25 | 0.25 | 32 |

$s=\sqrt{\frac{\sum (x-\bar{x})^2}{n-1}}=\sqrt{\frac{32}{7}}\approx 2.14$
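The hand calculation above can be checked with a few lines of Python. This is only a verification sketch; the variable names are arbitrary, and `statistics.stdev` is used as an independent cross-check because it also divides by n - 1.

```python
import math
import statistics

# Contaminated mice counted in the 8 groups of the example above.
counts = [8, 11, 7, 13, 10, 11, 7, 9]

n = len(counts)
x_bar = sum(counts) / n                                # sample mean
sum_sq_dev = sum((x - x_bar) ** 2 for x in counts)     # sum of squared deviations
s = math.sqrt(sum_sq_dev / (n - 1))                    # sample standard deviation

print("mean:", x_bar)
print("sum of squared deviations:", sum_sq_dev)
print("s:", round(s, 2))
print("statistics.stdev:", round(statistics.stdev(counts), 2))
```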

- 3.4) Sum of squares (shortcuts)

The sum of the squares of the deviations from the mean has a shortcut notation and several alternative formulas. $SS(x)=\sum (x-\bar{x})^2$

A little algebraic simplification gives: $SS(x)=\sum x^2-\frac{(\sum x)^2}{n}$
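For readers who want the intermediate steps, the simplification can be written out as follows, using only $\bar{x}=\frac{\sum x}{n}$. The numerical check at the end reuses the contaminated-mice data from the standard deviation example, for which $\sum x=76$ and $\sum x^2=754$.

```latex
\begin{aligned}
SS(x) &= \sum (x-\bar{x})^2 \\
      &= \sum x^2 - 2\bar{x}\sum x + n\bar{x}^2 \\
      &= \sum x^2 - \frac{2\left(\sum x\right)^2}{n} + \frac{\left(\sum x\right)^2}{n} \\
      &= \sum x^2 - \frac{\left(\sum x\right)^2}{n} \\[6pt]
\text{Check: } SS(x) &= 754 - \frac{76^2}{8} = 754 - 722 = 32
\end{aligned}
```

The shortcut form gives the same value, 32, as summing the squared deviations directly.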

- 3.5) Chebyshev’s Theorem: The proportion of the values that fall within $k$ standard deviations of the mean is at least $1-1/k^2$, where $k$ is any number greater than 1. "Within $k$ standard deviations" means the interval from $\bar{x}-ks$ to $\bar{x}+ks$. Chebyshev’s theorem holds for any data set, regardless of its distribution.

- 3.6) Empirical Rule: This rule only works for bell-shaped (normal) distributions. With this kind of distribution, we have: approximately 68% of the data values fall within one standard deviation of the mean; approximately 95% of the data values fall within two standard deviations of the mean; approximately 99.7% of the data values fall within three standard deviations of the mean.
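To compare the two results, the sketch below evaluates the Chebyshev lower bound $1-1/k^2$ next to the proportion that an exactly normal distribution places within $k$ standard deviations of its mean. The function names are hypothetical; the normal proportions come from the standard library's `NormalDist`.

```python
from statistics import NormalDist


def chebyshev_lower_bound(k):
    """Minimum proportion within k standard deviations (any distribution)."""
    if k <= 1:
        raise ValueError("Chebyshev's theorem is stated for k > 1")
    return 1 - 1 / k ** 2


def normal_coverage(k):
    """Proportion of a normal distribution within k standard deviations."""
    z = NormalDist()  # standard normal: mean 0, standard deviation 1
    return z.cdf(k) - z.cdf(-k)


print(f"k=1: normal {normal_coverage(1):.4f}")
for k in (2, 3):
    print(f"k={k}: Chebyshev at least {chebyshev_lower_bound(k):.4f}, "
          f"normal {normal_coverage(k):.4f}")
```

The Chebyshev bound is much weaker (for example, at least 75% for k = 2, against roughly 95% for a normal distribution) because it has to hold for every possible distribution.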

Applications

This article (http://www.nature.com/ng/journal/v39/n7s/full/ng2042.html), titled The Population Genetics of Structural Variation, discusses genomic variation in the human genome. It summarizes recent dramatic advances, illustrates the diverse mutational origins of chromosomal rearrangements, and argues that their complexity necessitates a re-evaluation of existing population genetic methods. It starts with an introduction to genomic variants, including their biological significance and basic characteristics, which motivates the study of structural variation. It then points out the improvements in knowledge of structural variation in the human genome relative to the current state of research, and ends with two important future challenges in the study of structural variation.

Software

Problems

6.1) Let X be a random variable with mean 80 and standard deviation 12. Find the mean and the standard deviation of the following variable: X- 20. Choose one answer.

(a) Mean = 60, standard deviation = 144

(b) Mean = 60, standard deviation = 12

(c) Mean = 80, standard deviation = 12

(d) Mean = 60, standard deviation = -8

6.2) A physician collected data on 1000 patients to examine their heights. A statistician hired to look at the files noticed the typical height was about 60 inches, but found that one height was 720 inches. This is clearly an outlier. The physician is out of town and can't be contacted, but the statistician would like to have some preliminary descriptions of the data to present when the doctor returns. Which of the following best describes how the statistician should handle this outlier? Choose one answer.

(a) The statistician should publish a paper on the emergence of a new race of giants.

(b) The statistician should keep the data point in; each point is too valuable to drop one.

(c) The statistician should drop the observation from the analysis because this is clearly a mistake; the person would be 60 feet tall.

(d) The statistician should analyze the data twice, once with and once without this data point, and then compare how the point affects conclusions.

(e) The statistician should drop the observation from the dataset because we can't analyze the data with it.

6.3) Researchers do a study on the number of cars that a person owns. They think that the distribution of their data might be normal, even though the median is much smaller than the mean. They make a p-plot. What does it look like? Choose one answer.

(a) It's not a straight line.

(b) It's a bell curve.

(c) It's a group of points clustered around the middle of the plot.

(d) It's a straight line.

6.4) Which of the following parameters is most sensitive to outliers? Choose one answer.

(a) Standard deviation

(b) Interquartile range

(c) Mode

(d) Median

6.5) Which value given below is the best representative for the following data? 2, 3, 4, 4, 4, 4, 4, 5, 6, 7, 8, 9, 9, 9, 9, 9, 10, 11. Choose one answer.

(a) The weighted average of the two modes or (4*5 + 9*5)/10 = 6.5

(b) No single number could represent this data set

(c) The average of the two modes or (4 + 9) / 2 = 6.5

(d) The mean or (2 + 3 + 4 + … + 10 + 11)/18 = 5.9

(e) The median or (6 + 7)/2 = 6.5

6.6) The following data were collected from a website for 121 schools and included these attributes about each institution: name, public or private institution, state, cost of health insurance, resident tuition, resident fees, resident total expenses, nonresident tuition, nonresident fees, and nonresident total expenses in 2005. It was surprising that some medical schools charge no tuition for residents. However, other students pay about $20,000 in fees.

| | Min | Q1 | Median | Q3 | Max |
|---|---|---|---|---|---|
| Private | $6,550 | $30,729 | $33,850 | $36,685 | $41,360 |
| Public | $0 | $10,219 | $16,168 | $18,800 | $27,886 |

On the same scale, use the 5-Number summary to construct two boxplots for the tuition for residents at 73 public and 48 private medical colleges. Use the data and plots to determine which statement about centers is true.

(a) For private medical schools, the mean tuition of residents is greater than the median tuition for residents.

(b) With these data, we cannot determine the relationship between mean and median tuition for residents.

(c) For private medical schools, the mean tuition of residents is equal to the median tuition for residents.

(d) For private medical schools, the mean tuition of residents is less than the median tuition for residents.

6.7) Suppose that we create a new data set by doubling the highest value in a large data set of positive values. What statement is FALSE about the new data set? Choose one answer.

(a) The mean increases

(b) The standard deviation increases

(c) The range increases

(d) The median and interquartile range both increase

6.8) Consider a large data set of positive values and multiply each value by 100. Which of the following statements is true? Choose one answer.

(a) The mean, median, and standard deviation increase

(b) The mean and median increase but the standard deviation is unchanged.

(c) The standard deviation increases but the mean and median are unchanged.

(d) The range and interquartile range are unchanged

7) References

- SOCR Home page: http://www.socr.umich.edu


Parametric Inference

Foundations of parametric (model-based) statistical inference.

Probability Theory

Random variables, stochastic processes, and events are the core concepts necessary to define likelihoods of certain outcomes or results to be observed. We define event manipulations and present the fundamental principles of probability theory including conditional probability, total and Bayesian probability laws, and various combinatorial ideas.

Odds Ratio/Relative Risk

The relative risk, RR, (a measure of dependence comparing two probabilities in terms of their ratio) and the odds ratio, OR, (the ratio of two odds, where the odds of an event is the fraction of its probability and the complement of that probability) are widely applicable in many healthcare studies.
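As a brief illustration of the two measures, the sketch below computes RR and OR from a 2x2 table of counts. The function names and the counts (30 cases among 100 exposed subjects, 10 cases among 100 unexposed subjects) are hypothetical and chosen only to make the arithmetic easy to follow.

```python
def relative_risk(a, b, c, d):
    """RR for a 2x2 table.

    a, b: exposed cases and non-cases; c, d: unexposed cases and non-cases.
    """
    risk_exposed = a / (a + b)
    risk_unexposed = c / (c + d)
    return risk_exposed / risk_unexposed


def odds_ratio(a, b, c, d):
    """OR for the same table: odds of disease if exposed over odds if not."""
    odds_exposed = a / b      # equals p / (1 - p) with p = a / (a + b)
    odds_unexposed = c / d
    return odds_exposed / odds_unexposed


# Hypothetical counts: 30 of 100 exposed and 10 of 100 unexposed are cases.
rr = relative_risk(30, 70, 10, 90)
odds = odds_ratio(30, 70, 10, 90)
print(f"RR = {rr:.2f}, OR = {odds:.2f}")
```

For these counts the risk is three times higher in the exposed group, while the odds ratio is larger than the relative risk; the two measures are close only when the outcome is rare.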

Probability Distributions

Probability distributions are mathematical models for processes that we observe in nature. Although there are different types of distributions, they have common features and properties that make them useful in various scientific applications.

Resampling and Simulation

Resampling is a technique for estimating sample statistics (e.g., medians, percentiles) by using subsets of the available data or by randomly drawing from the data with replacement. Simulation is a computational technique that imitates the behavior of a real-world process or system over time, without waiting for the events of interest to occur by chance.
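
One common form of resampling is the bootstrap, which draws repeatedly from the observed data with replacement. The sketch below is a minimal version using only the Python standard library; the data values, the number of resamples, and the simple percentile-interval rule are assumptions made for illustration.

```python
import random
import statistics


def bootstrap_medians(data, n_resamples=1000, seed=0):
    """Medians of resamples drawn from `data` with replacement."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    n = len(data)
    medians = []
    for _ in range(n_resamples):
        resample = [rng.choice(data) for _ in range(n)]
        medians.append(statistics.median(resample))
    return medians


def percentile_interval(values, lower=0.025, upper=0.975):
    """Crude percentile interval taken from the sorted bootstrap values."""
    ordered = sorted(values)
    last = len(ordered) - 1
    return ordered[int(lower * last)], ordered[int(upper * last)]


# Hypothetical measurements
observed = [4.1, 5.0, 5.3, 5.9, 6.2, 6.8, 7.4, 8.0, 9.5, 12.1]
medians = bootstrap_medians(observed)
low, high = percentile_interval(medians)
print(f"sample median = {statistics.median(observed)}, interval = ({low}, {high})")
```

The spread of the bootstrap medians gives a rough sense of how much the sample median would vary from sample to sample.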

Design of Experiments

Design of experiments (DOE) is a technique for systematic and rigorous problem solving that applies data collection principles to ensure the generation of valid, supportable and reproducible conclusions.

Intro to Epidemiology

Epidemiology is the study of the distribution and determinants of disease frequency in human populations. This section presents the basic epidemiology concepts. More advanced epidemiological methodologies are discussed in the next chapter.

Experiments vs. Observational Studies

Experimental and observational studies have different characteristics and are useful in complementary investigations of association and causality.

Estimation

Estimation is a method of using sample data to approximate the values of specific population parameters of interest, such as the population mean, variability, or 97th percentile. Estimated parameters are expected to be interpretable, accurate, and optimal in some sense.
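
For the three parameters mentioned above, sample-based point estimates might be computed as in this sketch. It uses Python's standard `statistics` module; the sample itself is hypothetical, and the percentile uses the module's default interpolation rule, which is only one of several conventions.

```python
import statistics


def point_estimates(sample):
    """Point estimates of the mean, standard deviation, and 97th percentile."""
    return {
        "mean": statistics.mean(sample),
        "sd": statistics.stdev(sample),  # sample SD, divides by n - 1
        # quantiles(n=100) returns 99 cut points; index 96 is the 97th percentile
        "p97": statistics.quantiles(sample, n=100)[96],
    }


# Hypothetical sample: the integers 1 through 100
measurements = list(range(1, 101))
estimates = point_estimates(measurements)
print(estimates)
```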

Hypothesis Testing

Hypothesis testing is a quantitative decision-making technique for examining the characteristics (e.g., centrality, span) of populations or processes based on observed experimental data.

Statistical Power, Sensitivity and Specificity

The fundamental concepts of type I (false-positive) and type II (false-negative) errors lead to the important study-specific notions of statistical power, sample size, effect size, sensitivity and specificity.
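
In a diagnostic-testing setting, these quantities can be read off a table of true/false positives and negatives. The sketch below assumes that layout; the counts are hypothetical.

```python
def diagnostic_summary(tp, fp, fn, tn):
    """Rates derived from counts of true/false positives and negatives."""
    return {
        "sensitivity": tp / (tp + fn),          # P(test positive | condition present)
        "specificity": tn / (tn + fp),          # P(test negative | condition absent)
        "false_positive_rate": fp / (fp + tn),  # equals 1 - specificity
        "false_negative_rate": fn / (fn + tp),  # equals 1 - sensitivity
    }


# Hypothetical counts: 100 subjects with the condition, 200 without
summary = diagnostic_summary(tp=90, fp=40, fn=10, tn=160)
for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

Under the usual analogy, the false-positive rate plays the role of the type I error rate, and sensitivity plays the role of power (one minus the type II error rate).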

Data Management

All modern data-driven scientific inquiries demand deep understanding of tabular, ASCII, binary, streaming, and cloud data management, processing and interpretation.

Bias and Precision

Bias and precision are two important and complementary characteristics of estimated parameters that quantify the accuracy and variability of approximated quantities.
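
A small simulation can make the distinction concrete. The sketch below compares two variance estimators (dividing by n and by n - 1) on repeated samples from a standard normal distribution, whose true variance is 1. The sample size, number of repetitions, and seed are arbitrary choices for illustration.

```python
import random
import statistics


def compare_variance_estimators(sample_size=5, n_repeats=5000, seed=1):
    """Average value and spread of two variance estimators over many samples."""
    rng = random.Random(seed)
    by_n, by_n_minus_1 = [], []
    for _ in range(n_repeats):
        sample = [rng.gauss(0.0, 1.0) for _ in range(sample_size)]
        by_n.append(statistics.pvariance(sample))         # divides by n
        by_n_minus_1.append(statistics.variance(sample))  # divides by n - 1
    return {
        "mean_divide_by_n": statistics.mean(by_n),
        "mean_divide_by_n_minus_1": statistics.mean(by_n_minus_1),
        "spread_divide_by_n": statistics.stdev(by_n),
        "spread_divide_by_n_minus_1": statistics.stdev(by_n_minus_1),
    }


result = compare_variance_estimators()
for name, value in result.items():
    print(f"{name}: {value:.3f}")
```

The average of each estimator relative to the true value reflects its bias, while the spread of the estimates across repetitions reflects its precision.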

Association and Causality

An association is a relationship between two or more measured quantities that renders them statistically dependent, so that the occurrence of one changes the probability of the other. A causal relation is a specific type of association between an event (the cause) and a second event (the effect) that is considered to be a consequence of the first event.

Rate-of-change

Rate of change is a technical indicator describing the rate at which one quantity changes in relation to another quantity.

Clinical vs. Statistical Significance

Statistical significance addresses the question of whether or not the results of a statistical test meet an accepted quantitative criterion, whereas clinical significance answers the question of whether the observed difference between two treatments (e.g., new and old therapy) found in the study is large enough to alter clinical practice.

Correction for Multiple Testing

Multiple testing refers to analytical protocols involving testing of several (typically more than two) hypotheses. Multiple testing studies require correction for the inflated type I (false-positive) error rate, which can be done using Bonferroni's method or Tukey’s procedure to control the family-wise error rate (FWER), or using procedures that control the false discovery rate (FDR).
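
Of the corrections listed, Bonferroni's method is the simplest to state: with m tests and an overall level alpha, each test is compared against alpha / m. A minimal sketch follows; the p-values are hypothetical.

```python
def bonferroni_reject(p_values, alpha=0.05):
    """Flag hypotheses rejected under Bonferroni's per-test threshold alpha / m."""
    threshold = alpha / len(p_values)
    return [p <= threshold for p in p_values]


def bonferroni_adjust(p_values):
    """Bonferroni-adjusted p-values: each p multiplied by m, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Hypothetical p-values from four tests
p_values = [0.001, 0.012, 0.03, 0.2]
decisions = bonferroni_reject(p_values)
adjusted = bonferroni_adjust(p_values)
print(decisions)
print(adjusted)
```

The two functions express the same rule: comparing a raw p-value with alpha / m is equivalent to comparing the adjusted p-value with alpha.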

Chapter II: Applied Inference

Epidemiology

Correlation and Regression (ρ and slope inference, 1-2 samples)

ROC Curve

ANOVA

Non-parametric inference

Instrument Performance Evaluation: Cronbach's α

Measurement Reliability and Validity

Survival Analysis

Decision Theory

CLT/LLNs – limiting results and misconceptions

Association Tests

Bayesian Inference

PCA/ICA/Factor Analysis

Point/Interval Estimation (CI) – MoM, MLE

Study/Research Critiques

Common mistakes and misconceptions in using probability and statistics, identifying potential assumption violations, and avoiding them

Chapter III: Linear Modeling

Multiple Linear Regression (MLR)

Generalized Linear Modeling (GLM)

Analysis of Covariance (ANCOVA)

First, see the ANOVA section above.

Multivariate Analysis of Variance (MANOVA)

Multivariate Analysis of Covariance (MANCOVA)

Repeated measures Analysis of Variance (rANOVA)

Partial Correlation

Time Series Analysis

Fixed, Randomized and Mixed Effect Models

Hierarchical Linear Models (HLM)

Multi-Model Inference

Mixture Modeling

Surveys

Longitudinal Data

Generalized Estimating Equations (GEE) Models

Model Fitting and Model Quality (KS-test)

Chapter IV: Special Topics

Scientific Visualization

PCOR/CER methods: Heterogeneity of Treatment Effects

Big-Data/Big-Science

Missing data

Genotype-Environment-Phenotype associations

Medical imaging

Data Networks

Adaptive Clinical Trials

Databases/registries

Meta-analyses

Causality/Causal Inference, SEM

Classification methods

Time-series analysis

Scientific Validation

Geographic Information Systems (GIS)

Rasch measurement model/analysis

MCMC sampling for Bayesian inference

Network Analysis
